I meant: they typically use frequentist error rates etc. when evaluating Bayesian designs (e.g. paper), thus synchronised

Being penalised for peeking seems intuitive(?), and “spending the alpha” is a nice safeguard, especially in drug development, where minimising sample size limits safety data.
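To make the peeking penalty concrete, here is a minimal simulation sketch. It is not taken from any paper in this thread. It assumes a simple two-sided z-test on standard normal data with a true effect of exactly zero, and the look schedule (every 20 observations up to 100) and the function name `simulate_type1` are made up for illustration. It compares one final look against five unadjusted looks at the same nominal 1.96 cutoff.

```python
import math
import random

def simulate_type1(looks, n_trials=2000, z_crit=1.96, seed=1):
    """Fraction of null trials that cross |z| > z_crit at any of the given looks."""
    rng = random.Random(seed)
    max_n = max(looks)
    look_set = set(looks)
    rejections = 0
    for _ in range(n_trials):
        total = 0.0
        rejected = False
        # Always draw the full path so both designs see identical data.
        for n in range(1, max_n + 1):
            total += rng.gauss(0.0, 1.0)  # true effect is exactly zero
            if n in look_set and abs(total / math.sqrt(n)) > z_crit:
                rejected = True
        rejections += rejected
    return rejections / n_trials

single_look = simulate_type1(looks=[100])
five_looks = simulate_type1(looks=[20, 40, 60, 80, 100])
print(f"one look at n=100:   {single_look:.3f}")
print(f"five unadjusted looks: {five_looks:.3f}")
```

Under these assumptions the single look should land near the nominal 5%, while the five unadjusted looks reject noticeably more often. That excess is what an alpha-spending rule is designed to remove.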

I question Berger and Delampady in a formal sense. And I know (to the extent it’s possible to know) about small drug effects from being involved in a multitude of studies over decades, plus it follows from biology when a drug has any activity of any kind, if you can measure things well enough. This is not just semantics. Null hypothesis testing depends on this for part of its meaning, and many a time has a null been rejected because of a clinically trivial effect.

It is intuitive and a safeguard if you emphasize reverse information-flow probabilities.

“Conclusions: Most large treatment effects emerge from small studies, and when additional trials are performed, the effect sizes become typically much smaller. Well-validated large effects are uncommon and pertain to nonfatal outcomes.” [jama]

That’s precisely my point. Large effects are rare. Most drugs don’t work very well, and quite a lot don’t work at all. Think of Tamiflu, for example. It seems to me to be pharmacological nonsense to think that there is no such thing as a false positive (as I’ve heard some statisticians say) on the grounds that anything you take must have some effect. Perhaps mathematicians have a rosier view of drugs than pharmacologists do.

Paul’s comment is not about the true drug effect but more about the estimated effect. Estimates are clearly overestimates of true effects when there is cherry picking/publication bias, and this is pronounced for small studies. The question remains about whether true effects are non-zero… Tamiflu is perhaps a good example of a drug whose effect was vastly overrated, but the real effect remains non-zero.

I think it’s very debatable whether or not the true effect is non-zero.

I see that some of my posts here have been hidden because they were “flagged by the community as spam”.

I’m aware that arguments can get vicious in statistics, but I have never encountered this sort of behaviour anywhere else.

surely a mistake… we need this discussion

Good discussion, must have been a mistake and will hopefully promptly be rectified

The system automatically flags when there are multiple posts from the same person and these posts have URL reference links in common (e.g. more than one post referenced arXiv). It was nothing to do with actual post content. I overrode the system so the posts should now appear.

Zero is too special an effect to occur very often. It’s too specific, and we know that drugs even have unintended effects that are nonzero. But we’re getting away from the topic of the discussion, which is measuring the quality of decisions for different statistical approaches.

aha thank you very much. Links could be advertisements, but surely more often they are references and therefore to be encouraged.

I find these results surprising, but maybe I shouldn’t: Likelihood Based Study Designs for Time-to-Event Endpoints, Jeffrey D. Blume

“the accumulating data can be regularly examined for strong evidence supporting either pre-specified hypothesis over the other; the chance of observing misleading evidence remains low.”

Although if we consider “any alternative hypothesis that indicated the hazard ratio is less than one” then: “if we used a delayed sequential design that does not allow the data to be examined until 10 events have been observed, then the probability of being misled drops to a reasonable 5.62% or less” [from 11.24%]. 11% seems high, although taken in the context of a cancer trial it seems reasonable.

surely the strongest argument against frequentism is this flexibility
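The effect of delaying the first look can be sketched with a toy simulation. This is not Blume's time-to-event setting: it assumes normal data with unit variance, a simple null (mean 0) against a simple alternative (mean `delta`), data generated under the null, and an evidence threshold `k = 8`. The function name `prob_misled` and all parameter values are hypothetical choices for illustration. It estimates how often the likelihood ratio ever favours the false alternative by a factor of at least `k` when looking after every observation, either from the first observation or only from the tenth onward.

```python
import math
import random

def prob_misled(first_look, max_n=100, delta=0.5, k=8.0, n_trials=3000, seed=7):
    """Estimate P(LR for H1 vs H0 reaches k at some look >= first_look | H0 true)."""
    rng = random.Random(seed)
    log_k = math.log(k)
    misled = 0
    for _ in range(n_trials):
        total = 0.0
        hit = False
        # Draw the full path every time so both designs are compared on the same data.
        for n in range(1, max_n + 1):
            total += rng.gauss(0.0, 1.0)  # data come from H0 (mean 0)
            # Log likelihood ratio of N(delta, 1) against N(0, 1) after n observations.
            log_lr = delta * total - n * delta ** 2 / 2.0
            if n >= first_look and log_lr >= log_k:
                hit = True
        misled += hit
    return misled / n_trials

immediate = prob_misled(first_look=1)
delayed = prob_misled(first_look=10)
print(f"looking from n=1:  {immediate:.3f}")
print(f"looking from n=10: {delayed:.3f}")
print(f"universal bound 1/k: {1 / 8.0:.3f}")
```

For a simple-versus-simple comparison like this one, the probability of ever reaching a likelihood ratio of `k` in favour of the false hypothesis is bounded by `1/k` however often you look. The delayed design can only do better on the same data, since it skips the early looks where misleading evidence is easiest to find.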

That’s an excellent point. The likelihoodist school, like Bayesianism, respects the likelihood principle, so no adjustment is needed for multiplicity or for stopping rules. But the work of Jeffrey Blume and Richard Royall shows that even if you want to preserve frequentist properties, non-frequentist approaches do a surprisingly good job. In Blume’s work on high-dimensional hypothesis testing (say for screening voxels in fMRI), a simple likelihood ratio approach has lower type I error and lower type II error than frequentist approaches, and these errors converge to zero as $n \rightarrow \infty$. Contrast with the frequentist approach, in which we allow a substantial, constant type I error probability.
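A small worked calculation illustrates the convergence claim in the simplest case I can think of. It is a sketch under stated assumptions rather than Blume's high-dimensional setup: a single fixed-sample comparison of normal means with unit variance, null mean 0 against alternative mean `delta = 0.5`, and evidence threshold `k = 8`. The helper names are hypothetical. The likelihood ratio has a closed form here, so both error probabilities can be computed exactly and set beside a one-sided z-test with type I error fixed at 0.05.

```python
import math

def norm_cdf(x):
    """Standard normal CDF via the complementary error function."""
    return 0.5 * math.erfc(-x / math.sqrt(2.0))

def lr_error_rates(n, delta=0.5, k=8.0):
    """Error probabilities for the rule 'favour H1 when LR >= k' with n observations."""
    a = math.sqrt(n) * delta / 2.0
    b = math.log(k) / (math.sqrt(n) * delta)
    misleading = norm_cdf(-(a + b))  # P(LR >= k | H0 true)
    missed = norm_cdf(-(a - b))      # P(LR <  k | H1 true)
    return misleading, missed

def z_test_type2(n, delta=0.5, z_alpha=1.6449):
    """Type II error of a one-sided z-test whose type I error is held at 0.05."""
    return norm_cdf(z_alpha - math.sqrt(n) * delta)

for n in (10, 50, 200, 1000):
    misleading, missed = lr_error_rates(n)
    print(f"n={n:5d}  LR: false-positive={misleading:.2e}  miss={missed:.2e}  "
          f"z-test: alpha=0.05 (fixed)  beta={z_test_type2(n):.2e}")
```

With these numbers both likelihood-ratio error probabilities shrink as `n` grows, while the z-test's type I error stays at 0.05 by construction and only its type II error shrinks.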

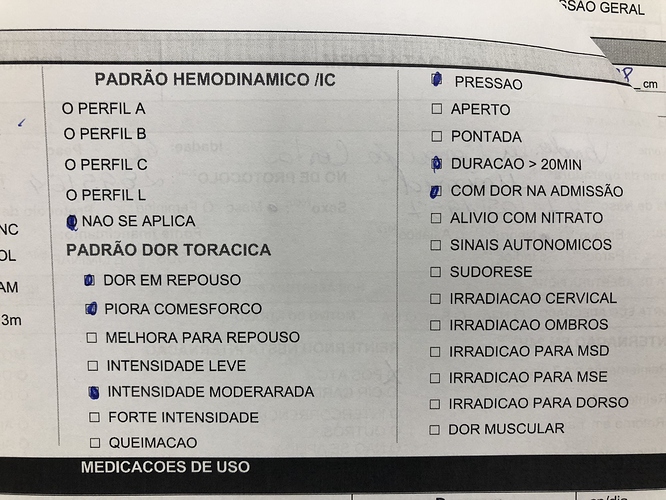

Whenever we explain chest pain to students, we talk about the predictive values of the clinical history. But when taking the history, we find it impossible to apply a negative predictive value to the characteristics of the pain, given that typical pain is very strongly related to epicardial lesions and ischemic load. How could we analyse clinical-history data of this kind? Any suggestions?