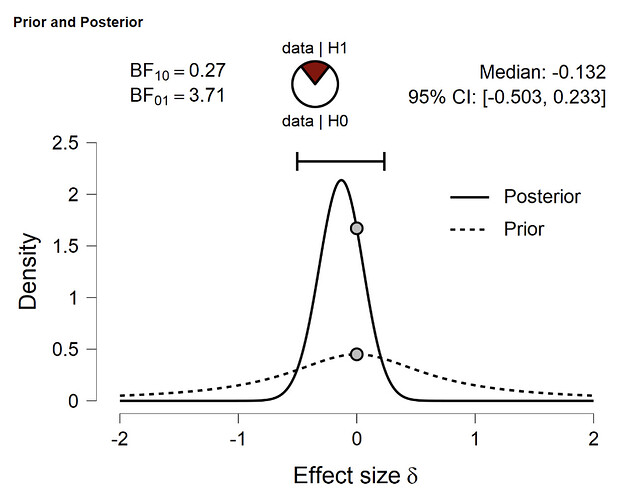

I ran several Bayesian paired-samples t-tests, and my results support H0. I read that it doesn't make sense to report the median posterior Cohen's δ and its 95% credible interval in this case. Does this mean that I can report only the Bayes factor and should basically ignore the whole plot of the prior and posterior distributions? (Across my analyses, δ ranges from 0.01 to 0.32, while H0 is supported weakly or moderately.)
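For reference, the posterior median of δ and a 95% credible interval are typically computed from posterior draws roughly as in the sketch below. It assumes the MCMC draws of δ can be exported as a plain list of numbers. `summarize_posterior` is a hypothetical helper, not part of any package. It returns the central (equal-tailed) interval, which may differ from a highest-density interval if that is what the software plots.

```python
import statistics


def summarize_posterior(delta_draws):
    """Hypothetical helper: summarize posterior draws of the effect size delta.

    Assumes `delta_draws` is a list of MCMC draws of delta exported from the
    analysis software. Returns the posterior median, a 95% central
    (equal-tailed) credible interval, and whether that interval contains 0.
    """
    median = statistics.median(delta_draws)
    # n=40 gives cut points at 2.5%, 5%, ..., 97.5%;
    # the first and last cut points bound the central 95% interval.
    cuts = statistics.quantiles(delta_draws, n=40, method="inclusive")
    lower, upper = cuts[0], cuts[-1]
    contains_zero = lower <= 0 <= upper
    return median, (lower, upper), contains_zero
```

The third return value simply checks whether 0 lies inside the interval; it says nothing by itself about the strength of evidence for H0, which is what the Bayes factor quantifies.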

I was also wondering: Are the benchmarks for Cohen’s δ and Cohen’s d the same?
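To make the comparison concrete: assuming δ in the paired test is the population standardized mean of the difference scores (μ_D / σ_D), its usual sample counterpart is d_z, the mean of the paired differences divided by their standard deviation. A minimal sketch with a hypothetical `cohens_dz` helper:

```python
import statistics


def cohens_dz(before, after):
    """Hypothetical helper: sample Cohen's d for paired data (d_z).

    Computes mean(differences) / sd(differences), where sd is the
    sample standard deviation (n - 1 denominator).
    """
    diffs = [a - b for a, b in zip(after, before)]
    return statistics.mean(diffs) / statistics.stdev(diffs)
```

Note that d_z is standardized by the SD of the differences rather than the SD of the raw scores, so its value can differ from a between-groups d computed on the same data.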

I forgot to add: the 95% credible interval always includes 0 in my case.