I would like to compare two repeated-measures experiments to test whether the change in conditions resulted in a significant (at say alpha .05) change in either/both inter- and intra-rater reliability.

Fortunately this experiment only had two repetitions of measurement by two observers. However, the outcome is categorical, which seems to preclude Bland-Altman visualization and limits of agreement testing.

I imagine that the most accurate approach to simultaneously determine both inter- and intra-rater ICCs in this setting is that detailed by Eliasziw et al. 25 years ago:

Eliasziw M, Young SL, Woodbury MG, Fryday-Field K. Statistical methodology for the concurrent assessment of interrater and intrarater reliability: using goniometric measurements as an example. Physical therapy. 1994 Aug 1;74(8):777-88.

Fortunately CRAN has implemented this in the relInterIntra function (relInterIntra source code) in the IRR package (documentation p 26-27 here).

To compare my two experiments say for statistically significant difference of the intrarater reliability, I would check that there is distance from the upper bound of the first to lower bound of second experiment at the designated two-tailed alpha level.

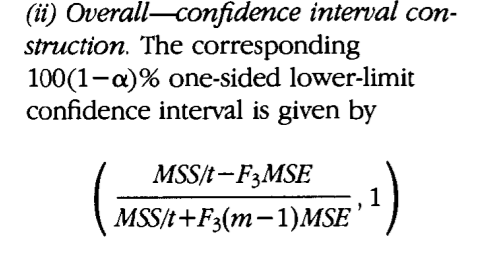

However, neither the paper by Eliasziw and colleauges nor the IRR package considered the case of calculating the UPPER confidence limit. Instead, a one-tailed test is used to find the lower limit:

where t is the number of observers, n is the number of subjects, m is the number of repetitions, and F3 is the 100(1 -alpha)th percentile point of the F distribution on

(n - 1) and n (m- 1) degrees of freedom.

I assume there is no convenient R package to get the two-sided limits in the simultaneous intra- and inter-rater ICC calculation setting. Therefore, I am thinking to go to the equation I have pasted above, and tweak it such that:

- lower bound: F3 uses alpha replaced by alpha/2 to get the two-sided CIs: 100(1 -alpha/2)th percentile

- upper bound: same equation is used but F3 becomes the 100(alpha/2)th percentile

A concern with the above approach is that these steps provide the full confidence interval for the ICC from each experiment individually. To compare the hypothesis of difference in the ICC between two experiments, I think the alpha for the confidence intervals in reality may need to be drawn LARGER than the desired hypohthesis alpha. For an alpha .05 Goldstein and Harvey argued for using z-score of 1.39 to draw CIs for graphical testing, rather than the traditional two-tailed 1.96:

Goldstein H, Healy MJ. The graphical presentation of a collection of means. Journal of the Royal Statistical Society: Series A (Statistics in Society). 1995 Jan;158(1):175-7.

https://rss.onlinelibrary.wiley.com/doi/abs/10.2307/2983411

We would also like to report the corresponding inter- and intra-rater standard errors of the measurement (SEM), but Eliasziw’s paper did not outline any sort of method for determining CIs on that.

Any other suggestions as to simultaneous comparisons of inter and intra-rater ICCs and SEMs across multiple experiments would be very welcome.