When performing logistic regression, epidemiologists and other researchers are usually interested in the Odds Ratio (OR) estimates rather than the regression coefficients. As we all know, the OR is estimated by exp(beta estimate). Most statistical software provides beta estimates, along with the standard errors of these estimates, and the usual confidence interval for beta is beta plus/minus 1.96SE(beta).

A confidence interval for the OR is, however, trickier. Some references advise taking the CI for beta and simply exponentiating it, so a CI for the OR would be exp[beta plus/minus 1.96SE(beta)]. This method has a nice property: if the CI for beta does not contain zero, then the exponentiated CI does not contain 1. This is how SPSS, for example, calculates a CI for the OR.
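As a minimal sketch of the exponentiation approach, the snippet below computes the interval from a coefficient and its standard error. The function name `exponentiated_or_ci` and the input values are hypothetical, chosen only for illustration; they do not come from any real model output.

```python
import math

def exponentiated_or_ci(beta, se, z=1.96):
    """Return (OR, lower, upper) by exponentiating the Wald interval for beta."""
    lower_beta = beta - z * se   # lower limit on the log-odds scale
    upper_beta = beta + z * se   # upper limit on the log-odds scale
    return math.exp(beta), math.exp(lower_beta), math.exp(upper_beta)

# Hypothetical estimates, for illustration only.
odds_ratio, lower, upper = exponentiated_or_ci(beta=0.5, se=0.25)
print(f"OR = {odds_ratio:.3f}, 95% CI = ({lower:.3f}, {upper:.3f})")
```

Because exp is strictly increasing, the interval for beta excludes 0 exactly when the exponentiated interval excludes 1, and the lower limit is always positive. The interval is not symmetric around the OR estimate.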

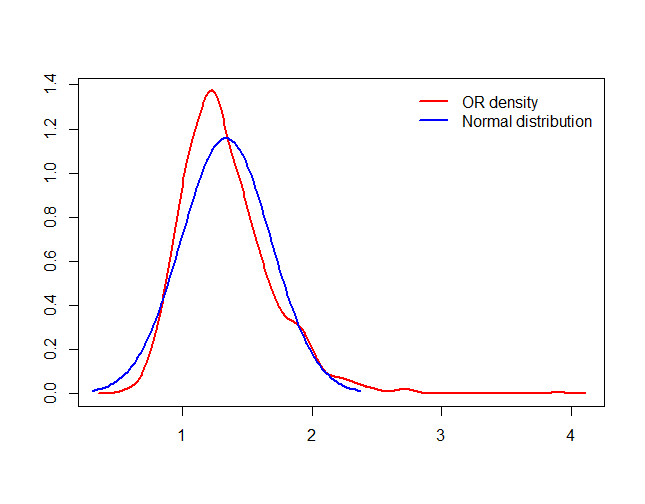

A different approach is to apply the delta method. When applying the method one gets that

SE(exp(beta)) = SE(OR) = SE(beta) × OR

So a CI for the OR would be OR plus/minus 1.96 × SE(beta) × OR
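For readers who want the intermediate step, the standard first-order delta method argument can be written out as follows. The hat notation is my own addition for the estimates, and the result is an approximation that relies on the estimate of beta being roughly normal.

```latex
\begin{align*}
\operatorname{Var}\bigl(g(\hat\beta)\bigr) &\approx \bigl[g'(\hat\beta)\bigr]^{2}\,\operatorname{Var}(\hat\beta),
  \qquad g(\beta) = e^{\beta},\quad g'(\beta) = e^{\beta} \\
\operatorname{SE}\bigl(\widehat{\mathrm{OR}}\bigr) &\approx e^{\hat\beta}\,\operatorname{SE}(\hat\beta)
  = \widehat{\mathrm{OR}}\cdot\operatorname{SE}(\hat\beta) \\
\text{95\% CI for OR:}\quad & \widehat{\mathrm{OR}} \pm 1.96\,\widehat{\mathrm{OR}}\cdot\operatorname{SE}(\hat\beta)
\end{align*}
```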

However: 1) It is possible that the lower limit of the CI will be negative. 2) It is possible that although the CI for beta does not contain zero, this CI will contain 1.
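Both situations are easy to reproduce numerically. The sketch below uses hypothetical coefficients and standard errors picked to trigger each case; the helper names `beta_ci` and `delta_or_ci` are my own and not from any package.

```python
import math

def beta_ci(beta, se, z=1.96):
    """Wald interval for the coefficient itself."""
    return beta - z * se, beta + z * se

def delta_or_ci(beta, se, z=1.96):
    """Delta-method interval: OR plus/minus z * SE(beta) * OR."""
    odds_ratio = math.exp(beta)
    se_or = se * odds_ratio          # delta-method standard error of the OR
    return odds_ratio - z * se_or, odds_ratio + z * se_or

# Hypothetical (beta, SE) pairs, for illustration only.
cases = {
    "beta CI excludes 0, delta CI contains 1": (0.5, 0.25),
    "delta CI has a negative lower limit": (1.0, 0.6),
}

for label, (beta, se) in cases.items():
    b_lo, b_hi = beta_ci(beta, se)
    d_lo, d_hi = delta_or_ci(beta, se)
    print(label)
    print(f"  CI for beta:     ({b_lo:.3f}, {b_hi:.3f})")
    print(f"  delta CI for OR: ({d_lo:.3f}, {d_hi:.3f})")
```

Since the delta-method lower limit equals OR × (1 − 1.96 × SE(beta)), it becomes negative whenever SE(beta) exceeds 1/1.96, roughly 0.51, regardless of the value of beta.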

Apparently, epidemiologists think that this is a problem.

As a statistician, I do not see any problem, and my recommendation is to use the delta method. But… what would you recommend? And if you favor the delta method, how would you communicate this?

) while the other one is statistically significant. So what would you do?