P Natarajan, R Young, NO Stitziel, S Padmanabhan, U Baber, R Mehran, S Sartori, V Fuster, DF Reilly, A Butterworth, DJ Rader, I Ford, N Sattar and S Kathiresan

Circulation, May 30 2017

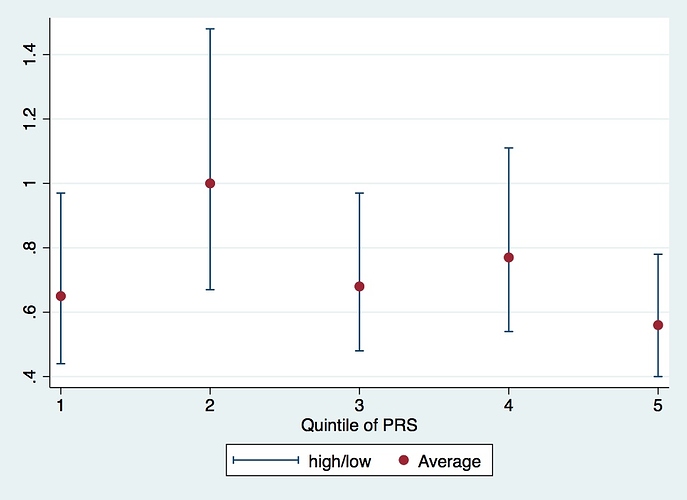

Relative risk reduction with statin therapy has been consistent across nearly all subgroups studied to date. However, in analyses of 2 randomized controlled primary prevention trials (ASCOT [Anglo-Scandinavian Cardiac Outcomes Trial-Lipid-Lowering Arm] and JUPITER [Justification for the Use of Statins in Prevention: An Intervention Trial Evaluating Rosuvastatin]), statin therapy led to a greater relative risk reduction among a subgroup at high genetic risk. Here, we aimed to confirm this observation in a third primary prevention randomized controlled trial. In addition, we assessed whether those at high genetic risk had a greater burden of subclinical coronary atherosclerosis.

We studied participants from a randomized controlled trial of primary prevention with statin therapy (WOSCOPS [West of Scotland Coronary Prevention Study]; n=4910) and 2 observational cohort studies (CARDIA [Coronary Artery Risk Development in Young Adults] and BioImage; n=1154 and 4392, respectively). For each participant, we calculated a polygenic risk score derived from up to 57 common DNA sequence variants previously associated with coronary heart disease. We compared the relative efficacy of statin therapy in those at high genetic risk (top quintile of the polygenic risk score) versus all others (WOSCOPS), as well as the association between the polygenic risk score and coronary artery calcification (CARDIA) and carotid artery plaque burden (BioImage).

Among WOSCOPS trial participants at high genetic risk, statin therapy was associated with a relative risk reduction of 44% (95% confidence interval [CI], 22-60; P<0.001), whereas in all others, the relative risk reduction was 24% (95% CI, 8-37; P=0.004) despite similar low-density lipoprotein cholesterol lowering. In a study-level meta-analysis across the WOSCOPS, ASCOT, and JUPITER primary prevention trials, the relative risk reduction in those at high genetic risk was 46% versus 26% in all others (P for heterogeneity=0.05).
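The high-genetic-risk grouping used in these comparisons can be sketched in a few lines of Python. This is a minimal illustration, not the authors' pipeline: it assumes the common weighted-allele-count construction, in which each participant's score is the sum over variants of the number of risk alleles carried (0, 1 or 2) multiplied by that variant's per-allele log odds ratio. The genotypes, allele frequencies and weights below are simulated and hypothetical, and the helper names (`polygenic_risk_score`, `standardize`, `top_quintile`) are illustrative only. The score is standardized so that effects can be expressed per 1-SD increase, and the top fifth of the distribution is flagged as high genetic risk.

```python
import numpy as np


def polygenic_risk_score(dosages: np.ndarray, log_or: np.ndarray) -> np.ndarray:
    """Weighted allele count for each participant (assumed construction).

    dosages: (n_people, n_variants) copies (0, 1 or 2) of each risk allele.
    log_or:  (n_variants,) per-allele log odds ratio used as the weight.
    """
    return dosages @ log_or


def standardize(scores: np.ndarray) -> np.ndarray:
    """Rescale to mean 0 and SD 1 so effects can be reported per 1-SD increase."""
    return (scores - scores.mean()) / scores.std()


def top_quintile(scores: np.ndarray) -> np.ndarray:
    """Boolean mask: True for scores at or above the 80th percentile."""
    return scores >= np.quantile(scores, 0.8)


# Hypothetical simulated inputs, NOT the study's genotypes or published weights.
rng = np.random.default_rng(seed=0)
n_people, n_variants = 1000, 57
allele_freq = rng.uniform(0.05, 0.95, size=n_variants)
log_or = np.log(rng.uniform(1.02, 1.20, size=n_variants))
dosages = rng.binomial(2, allele_freq, size=(n_people, n_variants))

z = standardize(polygenic_risk_score(dosages, log_or))
high_genetic_risk = top_quintile(z)
print(f"{high_genetic_risk.sum()} of {n_people} participants in the top quintile")
```

With continuous scores and essentially no ties, about one participant in five (here roughly 200 of 1000) falls in the top quintile; the "all others" comparison group is simply the complement of the mask, `~high_genetic_risk`.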
Across all 3 trials, the absolute risk reduction with statin therapy was 3.6% (95% CI, 2.0-5.1) among those in the high genetic risk group and 1.3% (95% CI, 0.6-1.9) in all others. Each 1-SD increase in the polygenic risk score was associated with a 1.32-fold (95% CI, 1.04-1.68) greater likelihood of having coronary artery calcification and a 9.7% (95% CI, 2.2-17.8) higher burden of carotid plaque.

Those at high genetic risk have a greater burden of subclinical atherosclerosis and derive greater relative and absolute benefit from statin therapy to prevent a first coronary heart disease event.

Clinical Trial Registration: URL: http://www.clinicaltrials.gov. Unique identifiers: NCT00738725 (BioImage) and NCT00005130 (CARDIA). WOSCOPS was carried out and completed before the requirement for clinical trial registration.
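The relative and absolute effect measures reported in this abstract are linked by simple arithmetic. The Python sketch below assumes that each relative risk reduction is 1 minus a hazard or risk ratio, that a number needed to treat is the reciprocal of the absolute risk reduction over the same follow-up, and that the per-SD estimate for coronary artery calcification comes from a model linear in the standardized score (such as logistic regression), so that per-SD odds ratios multiply. The function names are hypothetical, and the numbers needed to treat are derived here for illustration rather than quoted from the study.

```python
def ratio_from_rrr(rrr_pct: float) -> float:
    """Relative risk reduction (%) -> hazard or risk ratio (assumes RRR = 1 - ratio)."""
    return 1 - rrr_pct / 100


def number_needed_to_treat(arr_pct: float) -> float:
    """NNT = 1 / ARR; only meaningful over the follow-up the ARR was measured on."""
    return 100 / arr_pct


def odds_ratio_for_k_sd(or_per_sd: float, k: float) -> float:
    """Assumes log-odds linear in the score, so k SD gives or_per_sd ** k."""
    return or_per_sd ** k


# Values reported in the abstract.
hr_high, hr_others = ratio_from_rrr(44), ratio_from_rrr(24)   # WOSCOPS subgroups
nnt_high, nnt_others = number_needed_to_treat(3.6), number_needed_to_treat(1.3)
or_2sd = odds_ratio_for_k_sd(1.32, 2)                         # CARDIA, per 2 SD

print(round(hr_high, 2), round(hr_others, 2))   # 0.56 0.76
print(round(nnt_high), round(nnt_others))       # 28 77
print(round(or_2sd, 2))                         # 1.74
```

Under these assumptions, absolute risk reductions of 3.6% versus 1.3% correspond to treating roughly 28 versus 77 people over the follow-up period to prevent one first coronary heart disease event, and a score 2 SD higher corresponds to about 1.74-fold odds of coronary artery calcification.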