Regression Modeling Strategies: Binary Logistic Regression

This is the tenth of several connected topics organized around chapters in Regression Modeling Strategies. The purposes of these topics are to introduce key concepts in the chapter and to provide a place for questions, answers, and discussion around the chapter’s topics.

Additional links

RMS10

@f2harrell I’m looking for a single “holy grail” performance metric that can be used to compare binary, ordinal, and regression models. Here’s a hopefully realistic scenario that might explain my crazy quest.

In your class, I asked a question about how to properly model financial stock prices. Very often, financial analysts convert the data into a binary “up” or “down” and then try to predict based on various input factors if the stock price goes up or down. You corrected my perspective by explaining that rather than committing the cardinal sin of dichotomizing the continuous stock price data, we should use T1 prices as an input feature (along with any other features of interest) to predict T2 prices. That way, all the the information in the prices is preserved.

So, I want to test if this is truly a superior way to analyze the data. Concretely, I have in mind to reanalyze an article that dichotomized the data and then hopefully show that predicting prices is superior. To do this, I would like to compare three models:

- Binary: T2_binary_up_or_down ~ T1_X

- Ordinal: T2_bigRise_smallRise_approxZero_smallDrop_bigDrop ~ T1_X

- Continuous: T2_price ~ T1_X + T1_price

Essentially, the three models are the same in using the vector of T1_X as input features; the only difference is the amount of information contained in the price (which is stripped of information in the binary and ordinal models). They would all involve the number of rows of data and exactly the same missingness structures.

For a valid comparison, I would like a single performance measure that can evaluate all three models on the same scale. Does such a single measure exist?

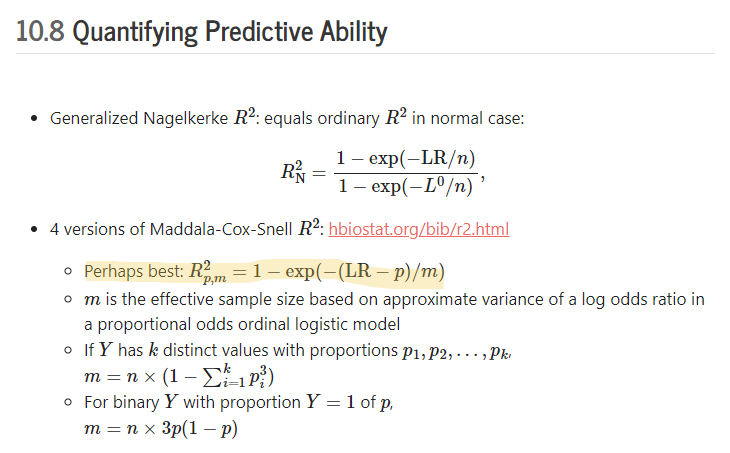

I seem to remember that you recommended the following highlighted R^2 formula:

Is this (or something else) appropriate for my scenario?

I’ve been looking at the Hmisc::R2Measures function, but I can’t seem to figure out how it works; in particular, I don’t understand how to supply the lr argument, especially for continuous models. If something here does the job, could you please help guide me?

At one point I thought that Somers’ D_{xy} rank correlation between predicted and observed was the way to go (simple translation of AUROC for binary Y). But when computed for binary Y it’s bigger than for multi-level Y because predicting a binary outcome is so much easier. Similarly the R^{2}_\text{adj} as you specified is going to make it too easy on coarser Y because it uses the effective sample size instead of the sample size. So for your purpose I would use the traditional R^2 from econometrics (Maddala-McGee etc.) with the actual sample size n used. This will be equivalent to just using the LR \chi^2 statistic for each model. More sensitive models will have larger LR.

Another approach is to consider an effect that is subtle (e.g., some weak but interesting predictor variable, choosing it based only on subject matter knowledge) and show that patterns can be discerned with continuous Y but not with very coarse Y.

I’ll have to get to the R2Measures question later.

Thanks for your response. What exactly do you mean by “the traditional R^2 from econometrics (Maddala-McGee etc.) with the actual sample size n used”? Is there a precise formula for that? Even better, is there an R function for that? Does this work for continuous, ordinal, and binary variables, or is it just for continuous, and then it is equivalent to chi-square for binary?

It’s described in the r2.html page. It’s equal to the last one I listed (my favorite) but reverting back to the apparent sample size.

Would you mind summarizing what steps need to be taken to come up with sample size for a logistic regression for an effect? I did not really understand it from what we adressed yesterday…

Would you mind summarizing what steps need to be taken to come up with sample size for a logistic regression for an effect? I did not really understand it from what we adressed yesterday…