Statisticians, clinical trialists, and drug regulators frequently claim that they want to control the probability of a type I error, and they go on to say that this equates to a probability of a false positive result. This thinking is oversimplified, and I wonder if type I error is an error in the usual sense of the word. For example, a researcher may go through the following thought process.

I want to limit the number of misleading findings over the long run of repeated experiments like mine. I set \alpha=0.05 so that only \frac{1}{20}^\text{th} of the time will the result be “positive” when the truth is really “negative”.

Note the subtlety in the word result. A result may be something like an estimate of a mean difference, or a statistic or p-value for testing for a zero mean difference. \alpha deals with such results. This alludes to the fraction of repeat experiments in which an assertion of a positive effect is made. But what most researchers really want is given by the following:

I want to limit the chance that the treatment doesn’t really work when I assert that it works (has a positive effect).

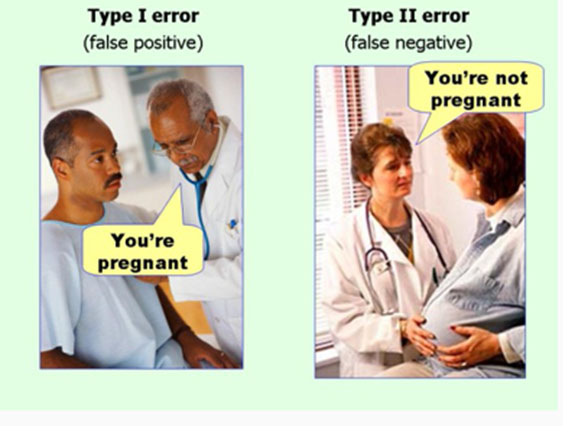

This alludes to a judgement or decision error — the treatment is truly ineffective when you assert that it is effective.

When I think of error I think of an error in judgment at the point at which the truth is revealed, e.g., one decides to get a painful biopsy and the pathology result is “benign lesion”. So to me a false positive means that an assertion of true positivity was made but that the revealed truth is not positive. The probability of making such an error is the probability that the assertion is false, i.e., the probability that the true condition is negative, e.g., a treatment effect is zero.

Suppose one is interested in comparing treatment B with treatment A, and the unknown difference between true treatment means is \Delta. Let H_0 be the null hypothesis that \Delta=0. Then the type I error \alpha of a frequentist test of H_0 using a nominal p-value cutoff of 0.05 is

P(test statistic > critical value | \Delta=0) = P(assert \Delta \neq 0 | \Delta=0) = P(p < 0.05 | \Delta=0)

The conditioning event follows the vertical bar | and can be read e.g. "if \Delta=0". \alpha is 0.05 if the p-values are accurate and the statistical test is a single pre-specified test (e.g., sequential testing was not done).
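The long-run meaning of \alpha can be checked with a small simulation. The sketch below is illustrative only: it assumes a two-sided z-test with a known common standard deviation, and the function names, sample size, and seed are hypothetical choices that do not come from the discussion above. Every simulated experiment is generated with \Delta=0, so the fraction with p < 0.05 estimates P(p < 0.05 | \Delta=0).

```python
import math
import random


def two_sided_p(z):
    """Two-sided p-value for a standard normal test statistic."""
    return math.erfc(abs(z) / math.sqrt(2))


def simulate_type_i_error(n_sim=20000, n_per_arm=50, sigma=1.0,
                          alpha=0.05, seed=1):
    """Fraction of simulated null experiments (Delta = 0) with p < alpha.

    Assumption: sigma is known, so each arm's sample mean can be drawn
    directly from its sampling distribution N(0, sigma / sqrt(n_per_arm)).
    """
    rng = random.Random(seed)
    se_mean = sigma / math.sqrt(n_per_arm)      # SE of one arm's mean
    se_diff = sigma * math.sqrt(2 / n_per_arm)  # SE of the mean difference
    rejections = 0
    for _ in range(n_sim):
        mean_a = rng.gauss(0.0, se_mean)  # treatment A, true mean 0
        mean_b = rng.gauss(0.0, se_mean)  # treatment B, true mean 0
        z = (mean_b - mean_a) / se_diff
        if two_sided_p(z) < alpha:
            rejections += 1
    return rejections / n_sim


rate = simulate_type_i_error()
print(f"fraction of null experiments with p < 0.05: {rate:.3f}")
```

The printed fraction should land close to 0.05. Note that the simulation has to assume \Delta=0 for every experiment, which is exactly the conditioning in the formula above.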

On the other hand, the false positive probability is

P(\Delta = 0 | assert \Delta \neq 0) = P(\Delta = 0 | p < 0.05)

This false positive probability can differ from the type I error by an arbitrary amount, and it can only be obtained from a Bayesian argument, because it requires a prior probability that \Delta = 0.
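To see how far apart the two quantities can be, here is a minimal sketch that applies Bayes' rule under strong simplifying assumptions that are mine rather than part of the argument above: the prior puts probability `prior_null` on \Delta=0, and when \Delta \neq 0 the test asserts an effect with a single fixed probability `power`. The function name and the default values are hypothetical.

```python
def false_positive_probability(prior_null, alpha=0.05, power=0.8):
    """P(Delta = 0 | p < alpha) by Bayes' rule.

    Assumptions (illustrative only): a point-mass prior probability
    `prior_null` that Delta = 0, and one fixed probability `power`
    of p < alpha whenever Delta != 0.
    """
    p_assert_and_null = alpha * prior_null           # P(p < alpha, Delta = 0)
    p_assert_and_effect = power * (1.0 - prior_null)  # P(p < alpha, Delta != 0)
    return p_assert_and_null / (p_assert_and_null + p_assert_and_effect)


for prior_null in (0.1, 0.5, 0.9):
    fpp = false_positive_probability(prior_null)
    print(f"P(Delta = 0) = {prior_null:.1f} -> "
          f"P(Delta = 0 | p < 0.05) = {fpp:.3f}")
```

With \alpha fixed at 0.05 throughout, the false positive probability under these assumptions runs from about 0.007 to about 0.36 as the prior probability of no effect moves from 0.1 to 0.9. The type I error does not change, yet the chance that an assertion of efficacy is wrong changes by a factor of about fifty.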

My conclusion is that even though many researchers claim to want control of type I error, what they are getting from the type I error probability \alpha is not what they really wanted in the first place. Thus controlling type I error was never the most relevant goal. Type I error is the probability of asserting an effect when there is none; it is not the probability that the treatment doesn’t work.

As a side note, treatments can cause harm and not just fail to benefit patients, yet the framing of H_0 does not formally allow for the possibility of harm. A Bayesian posterior probability, on the other hand, would be P(\Delta > 0) = 1 - P(\Delta \leq 0) = 1 - P(treatment has no effect or actually harms patients). This seems to be a more relevant probability than P(H_0 is true).
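As a rough illustration of such a posterior probability, the sketch below assumes a normal prior for \Delta and a normally distributed estimate with known standard error, so that the posterior is also normal. The prior, the example estimate, and the function names are hypothetical choices for illustration, and positive \Delta is taken to mean benefit.

```python
import math


def normal_cdf(x):
    """Standard normal cumulative distribution function."""
    return 0.5 * (1.0 + math.erf(x / math.sqrt(2)))


def posterior_prob_benefit(estimate, std_error, prior_mean=0.0, prior_sd=1.0):
    """P(Delta > 0 | data) under a conjugate normal-normal model.

    Assumptions (illustrative only): Delta ~ N(prior_mean, prior_sd^2)
    a priori, and the observed estimate ~ N(Delta, std_error^2).
    """
    prior_precision = 1.0 / prior_sd ** 2
    data_precision = 1.0 / std_error ** 2
    post_var = 1.0 / (prior_precision + data_precision)
    post_mean = post_var * (prior_precision * prior_mean
                            + data_precision * estimate)
    # For a normal posterior, P(Delta > 0) = Phi(post_mean / post_sd)
    return normal_cdf(post_mean / math.sqrt(post_var))


p_benefit = posterior_prob_benefit(estimate=0.3, std_error=0.2)
print(f"P(Delta > 0 | data)  = {p_benefit:.3f}")
print(f"P(Delta <= 0 | data) = {1.0 - p_benefit:.3f}")
```

The second printed value is the probability that the treatment has no effect or causes harm, given the data and the assumed prior. A different prior would give a different answer, which is why the prior has to be stated.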