> I would appreciate if you can help me understand. Philosophically – not statistics or some algebraic expression – I am doing a little experiment with GLM, my data is fixed … I cannot change it and my formula for this is fixed.

A subtle point is that, for statistical purposes, your data are considered a sample from a hypothetical distribution. Your data are one subset of a multitude of possible ones. This is a critical point that will be important a bit later.

> I get a p-value and I use that p-value to reject the NULL or not reject the NULL.

This is an extremely narrow understanding and interpretation of classical hypothesis tests. Suffice it to say, there are 2 critical perspectives on this. One is the Neyman-Pearson decision theory viewpoint, which compresses (some might say distorts) results into reject/fail to reject categories. The other is the perspective of R.A. Fisher, where a p-value is a quantitative measure of surprise, conditional on the truth of an asserted probability distribution as a reasonably accurate model of the situation.

> Am I doing something naive (or statistically incorrect) interpreting p-value as I did?

You are conflating the p-value as a conditional, continuous measure of surprise with its use in a dichotomous decision rule. The tradition of mapping arbitrary p-value levels (0.05, 0.01, etc.) to reject/fail to reject decisions, absent hard thinking about the context, is unjustified but very common.

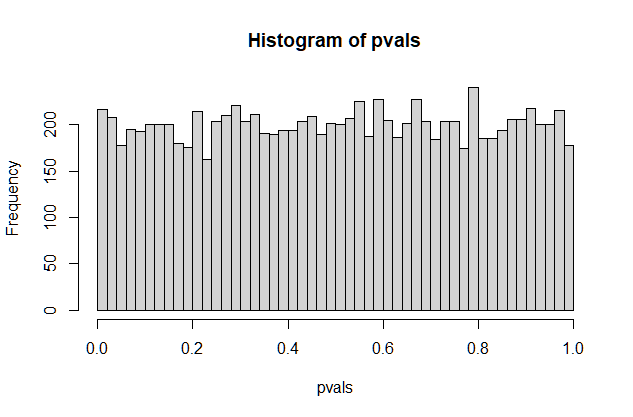

RE: your sample as fixed. Modern statistical methods treat your “fixed” sample as a population to be sampled from. Instead of making a mathematical assumption about the population that generated your data, you can create new samples using computer programs, repeat the analysis, and calculate the relevant statistics. If your sample was representative of the population, these resamples will also be.

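These are known as “resampling” methods, of which there are 2 main ones for estimation: the bootstrap, which resamples with replacement, and the jackknife, which resamples without replacement by leaving out one observation (or a block of observations) at a time.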
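To make the two estimation methods concrete, here is a minimal sketch in Python using only the standard library. The data values, the function names, and the choice of the mean as the statistic are all my own assumptions for illustration; they do not come from your GLM. The same idea applies to any statistic you can recompute on a resample.

```python
import random
import statistics


def bootstrap_means(sample, n_resamples=2000, seed=0):
    """Means of resamples drawn WITH replacement, each the same size as the sample."""
    rng = random.Random(seed)
    n = len(sample)
    return [statistics.fmean(rng.choices(sample, k=n)) for _ in range(n_resamples)]


def jackknife_means(sample):
    """Means of the n leave-one-out subsamples (no observation is ever repeated)."""
    total = sum(sample)
    n = len(sample)
    return [(total - x) / (n - 1) for x in sample]


def percentile_interval(values, level=0.95):
    """Rough percentile interval read off the sorted resampled statistics."""
    ordered = sorted(values)
    lo_idx = int((1 - level) / 2 * len(ordered))
    hi_idx = int((1 + level) / 2 * len(ordered)) - 1
    return ordered[lo_idx], ordered[hi_idx]


# Made-up numbers standing in for your "fixed" data.
sample = [2.1, 3.4, 1.9, 5.6, 4.2, 3.8, 2.7, 4.9, 3.1, 3.6]

boot = bootstrap_means(sample)
jack = jackknife_means(sample)
low, high = percentile_interval(boot)

print("observed mean:", statistics.fmean(sample))
print("bootstrap 95% percentile interval:", (low, high))
print("spread of jackknife means:", (min(jack), max(jack)))
```

The point is that the "fixed" sample produces a whole distribution of plausible values for the statistic, not a single number. The percentile interval here is the simplest bootstrap interval; better-behaved variants exist, so treat this as a sketch rather than a recommended procedure.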

For testing, you can perform permutation tests, which create an empirical null distribution to compare your observed statistic against, without needing to assume anything about the data-generating process except that the groups are “exchangeable” under the null hypothesis.
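A permutation test for a difference in group means can be sketched as follows, again in plain Python. The two groups, the function name, and the use of the absolute difference in means as the test statistic are assumptions made for illustration. This version samples random permutations rather than enumerating all of them, and adds 1 to the numerator and denominator so the estimated p-value is never exactly zero.

```python
import random
import statistics


def permutation_p_value(group_a, group_b, n_permutations=5000, seed=0):
    """Two-sided Monte Carlo permutation test for a difference in means."""
    rng = random.Random(seed)
    observed = statistics.fmean(group_a) - statistics.fmean(group_b)
    pooled = list(group_a) + list(group_b)
    n_a = len(group_a)
    extreme = 0
    for _ in range(n_permutations):
        # Under exchangeability, group labels are arbitrary, so shuffle them.
        rng.shuffle(pooled)
        diff = statistics.fmean(pooled[:n_a]) - statistics.fmean(pooled[n_a:])
        if abs(diff) >= abs(observed):
            extreme += 1
    # Count the observed labelling itself as one of the permutations.
    return observed, (extreme + 1) / (n_permutations + 1)


# Made-up measurements for two hypothetical groups.
treated = [5.1, 6.3, 5.8, 7.0, 6.1, 5.5]
control = [4.2, 5.0, 4.8, 5.3, 4.1, 4.9]

observed_diff, p_value = permutation_p_value(treated, control)
print("observed difference in means:", observed_diff)
print("permutation p-value:", p_value)
```

The p-value here is literally the proportion of shuffled datasets that look at least as extreme as yours, which is the "measure of surprise" reading: it is a continuous quantity, and nothing in the calculation forces a reject/fail to reject decision.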

The following is a good paper (no pun intended) that will help you understand p-values; it explores this from the perspective of resampling, so no algebra is needed.

Goodman, W. (2010). The Undetectable Difference: An Experimental Look at the “Problem” of p-values. JSM 2010, Session #119. (link)