In the proton radius* example I’ve been discussing, the CREMA group did challenge well-established, reproducible findings accumulated over 60 years. Previous experimental measurements of the proton radius were based on two classes of methods: hydrogen spectroscopy and electron scattering. CREMA introduced a variation on spectroscopy using muonic hydrogen and, almost by accident, decided at the last minute (deviating from the prespecified plan) to also scan parameter regions thought to have been ruled out by previous experiments. As a result, they reported an estimate lower than the internationally accepted value. At this point, nobody should have been betting on anything. Instead, people did the obvious: they made new attempts using the established methods…and lo and behold, their results started to move toward the lower value! Nonetheless, the CREMA result was excluded from the internationally accepted “best fit” estimate for several cycles of updates. Except for this last nuance, which may reflect “groupthink” more than anything else, this is precisely how such discrepancies ought to be resolved. No betting needed.

*I mistakenly referred to this as “proton mass” in some of my earlier posts.

In the experimental life sciences, an even more interesting example comes from Lithgow, Driscoll, and Phillips, who represent competing labs studying roundworm lifespan. The paper is short and nontechnical, so I won’t summarize it here.

https://www.nature.com/articles/548387a

Yet another example is discussed in the 2017 article by Gunter and me, linked above, where we write:

Consider this: to get a better handle on the “reproducibility” of published research, the Reproducibility Project has set about trying to reproduce some prominent results in cancer biology. At the time of writing, they claim that four of seven reports were reproduced. However, Jocelyn Kaiser reports in Science that: “Some scientists are frustrated by the Reproducibility Project’s decision to stick to the rigid protocol registered with eLife for each replication, which left no room for troubleshooting.” In other words, pre-specification is good for inference, but bad for science.

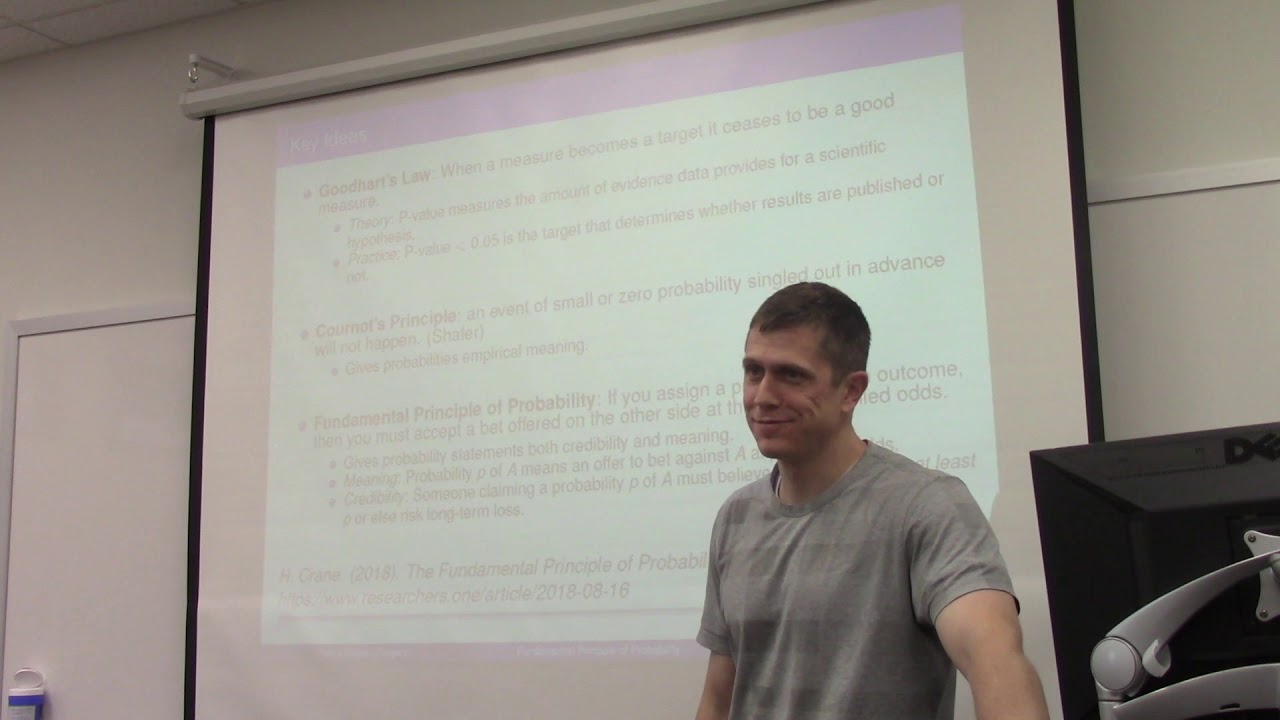

FPP also requires the original authors to prespecify the protocol for any replication attempt. Thus a third problem with FPP is that it does not show how discrepancies can and should be resolved, whereas the examples I posted here do. FPP focuses on a very narrow vision of “replicability” driven by statistical inference, which doesn’t seem to respect the lesson its author himself presents as Goodhart’s Law: “When a measure becomes a target, it ceases to be a good measure.” Instead, scientists should focus on the nuts and bolts, as the proton radius and roundworm lifespan examples illustrate. Institutional procedures that seem more promising to me for encouraging appropriate attitudes include the following:

https://www.nature.com/articles/542409a

https://journals.sagepub.com/doi/full/10.1177/0149206316679487

(Incidentally, the Reproducibility Project is now complete, and the results are not particularly encouraging; see the eLife collection “Reproducibility Project: Cancer Biology.”)

FPP would not be applicable to much of the social sciences for a different reason: these sciences (e.g., much of empirical economics) depend on observational data. “Replication” in these settings will always be muddied by confounding, because a new population would need to be sampled, differing in time, geography, market conditions, or other factors. Even following exactly the same replication protocol could be expected to yield different results, and it would not be clear whether the difference is due to confounding or to fundamental flaws in the original study. FPP is a non-starter for such research (though the same critique would likely apply to the Mogil/Macleod proposal I linked above).

Finally, it is worth reiterating that Crane’s critique of Naive Probabilism (which he wrote after the FPP paper) is basically what I’ve been railing about on this thread from the beginning (though I didn’t know the term until @R_cubed helpfully made me aware of it; I’d been using Taleb’s related but more obscure term “the ludic fallacy”). Here is Crane’s first paragraph:

Naive probabilism is the (naive) view, held by many technocrats and academics, that all rational thought boils down to probability calculations. This viewpoint is behind the obsession with ‘data-driven methods’ that has overtaken the hard sciences, soft sciences, pseudosciences and non-sciences. It has infiltrated politics, society and business. It’s the workhorse of formal epistemology, decision theory and behavioral economics. Because it is mostly applied in low or no-stakes academic investigations and philosophical meandering, few have noticed its many flaws. Real world applications of naive probabilism, however, pose disproportionate risks which scale exponentially with the stakes, ranging from harmless (and also helpless) in many academic contexts to destructive in the most extreme events (war, pandemic).