Thank you for your help. I’ve found the problem - it was the fit method. I’d changed Newton conjugate gradient to Basin Hopping optimization and obtained similar values as in rms package.

Yours sincerely,

Thank you for your help. I’ve found the problem - it was the fit method. I’d changed Newton conjugate gradient to Basin Hopping optimization and obtained similar values as in rms package.

Yours sincerely,

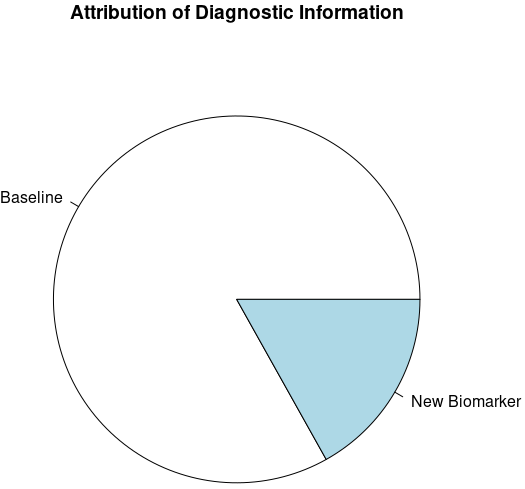

To emphasize the “Analysis Conclusions” (namely, “It adds a fraction of 0.17 of new diagnostic information to age and sex.”) in the case study, I would like to suggest adding a simple pie chart as visual information. For example,

a <- 1 - var(pre) / var(post) # 0.17 ("fraction of new diagnostic information")

pie(c(1 - a, a), labels = c("Age + Sex", "Cholesterol"), main = "Attribution of Diagnostic Information")

In general, a pie chart like this may convey the value of any added “biomarker”:

pie(x=c(1-a, a), labels = c("Baseline", "New Biomarker"), main = "Attribution of Diagnostic Information")

Would this be a good idea? Any other suggestions? Thank you very much, Dr. Harrell!

Not a good idea. Ink:information ratio is nearly infinite. It’s a single number! Pie charts are highly inaccurate in terms of how humans perceive them. Among other things we perceive different slice areas depending on how the pie is rotated. Length along a common scale is the guiding principle to use if a graphic is needed.

Thanks a lot for your reply! I agree the ink:information ratio is appalling (although the “code:information” ratio makes it an attempting try ![]() ). Thanks for your suggestions!

). Thanks for your suggestions!

The following Q&A is an archive of older discussions that will be lost when Statistically Efficient Ways to Quantify Added Predictive Value of New Measurements | Statistical Thinking is moved to a new blog platform.

From Ali: Fantastic post! Just one question regarding the c-index measure; although I perfectly understood (and concur with) your hesitation to use them, I am a bit confused when comparing it to Harrell et al. 1988 paper, in which increase in c-index was used to assess the added (prognostic) value of treadmill test in determining 5-year survival. Could you elaborate please?

FH Reply: Great question! Quite simply I was wrong to emphasize the use of the c-index to measure added information. I should have stopped with the idea of using it to quantify pure predictive discrimination of a single model/set of variables. If there is an increase in c you have evidence for added predictive information, but if c doesn’t increase you don’t have evidence for no added value, because c is insensitive.

Ali Follow-up: Thanks for the clear explanation!

So, this brings me to my next question (sorry in advance if it’s a very basic question, I’m not a statistician): Does difference in sample size between the baseline model and the model with the additional biomarker data make a difference here? I’m asking since often in a medical setting additional biomarker data can only be obtained for a subset of patients (due to biomarker acquisition costs for example).

So in such a case, would you compare the baseline model with full observations (n1) with the biomarker-enhanced model (n2<n1), or would you use the same patient population (n2) in both and risk lowering the statistical power of the baseline model (i.e., lowering the bar)? p.s. i’d missed the part where you mentioned (the metrics are sample size-independent); so i guess i know the answer … ![]()

FH Response: You probably need to use the smaller sample size throughout.

Ali: Thanks Frank! Very helpful. Just one last question that just came to mind (apologies for serial questions, feel free to ignore):

I know the goal is not to report the absolute predictive power of the new variable, but the “additional” value compared to baseline; but I’m wondering whether the difference in predictive power is better explained by reporting the same statistics (change in variance, etc.) on the “validation set” as opposed to whole training data?

FH: And I have sort of mixed the ideas of total value and added value in the article. Yes these statistics work even better on independent validation samples. Of course in most instances strong internal validation is better (and it’s certainly faster at least). You can use the bootstrap to get overfitting-corrected versions of any of the indexes I described.

Bob Carpenter: I desperately needed this 20 years ago when I was starting to work on machine learning for natural language classifiers. Instead, I received a litany of model evaluation options, including the dreaded F-measure (harmonic mean of precision and recall) so beloved by natural language processing researchers.

The model comparison approach we recommend for Stan is based on the posterior predictive distribution,

p(y’ | y) = INTEGRAL_theta p(y’ | theta) p(theta | y) d. theta.

Ideally, we evaluate under cross-validation. The widely applicable information criterion (WAIC) is an approximation to cross-validation, and DIC is an even cruder approximation based on a point estimate. These days, we tend to approximate the cross-validation directly using importance sampling in the loo package, which is more robust than WAIC. See Vehtari et al.'s paper: https://arxiv.org/abs/1507… and Stan’s loo package: https://cran.r-project.org/… One problem if you do this on the log scale is that log loss tends to be very flat in the operating range of most classifiers, making it hard hard to detect relatively large differences in estimates. Wang and Gelman have a nice paper on this in a hierarchical modeling context where big differences in subgroup predictions get washed out in the overall measure: http://www.stat.columbia.ed…

We’ve been leaning more toward direct evaluation of the posterior predictive distribution for calibration and sharpness. Gneiting et al. have a nice JRSS paper about this: https://rss.onlinelibrary.w…

Not surprisingly, the same authors have also written about proper scoring rules. We’re also using calibration to validate our algorithms, as described in this paper by Talts et al. which provides a robust form of Cook’s original calibration diagnostics: https://arxiv.org/pdf/1804…

More fundamentally, rather than comparing models and choosing one, we recommend the more practical approach of continuously expanding the model until you stop getting any gains in posterior predictions of interest. When more data shows up, the boundary of that choice will change because it’s based on the posterior predictive distribution. This is largely a consequence of the philosophy of not believing any of our models are true in any scientific sense other than a practical one that allows us to make well-calibrated inferences.

Bayes factors, on the other hand, roll in a term for the prior. This makes them about the prior predictive distribution, and makes them very sensitive to prior assumuptions. While we still don’t like them for model comparison, we’re putting more and more emphasis on prior predictive distributions being sensible as a measure of whether our priors are at least weakly informative about the scale of the data and parameters, which we would like them to be. Here’s a preprint of the Gabry et al. JRSS paper that lays a lot this out in the context of proposing a general Bayesian workflow: https://arxiv.org/abs/1709…

Sorry for dumping a seminar’s worth of reading material into a blog reply.

FH: Bob this is food for thought for many months to come. I really appreciate your sharing this and giving such useful links. I’m just starting to understand WAIC and loo measures. I admit that the Bayesian loo approach is very appealing. But neither of these instantly gives me a sample-size independent measure of something akin to explained variation.

Regarding your comment on building up models, I’ve a nagging concern that the posterior distribution of a given parameter in the final model will not be wide enough to take feature selection uncertainty into account. This is in distinction to formal penalization, e.g., using horseshoe or zero-centered Gaussian priors. I’d appreciate your take on that.

Alex Hayes: It seems like the major difference between the loo approach and likelihood ratio tests (with, say, uniform priors) or AIC comparisons is that loo compares out-of-sample likelihood, and likelihood ratio tests (or AIC comparisons) are in-sample. Can you comment on in-sample vs out-of-sample measures of added value? I’m concerned about overfitting with the in-sample measures.

FH: Super question. I hope someone can find comparative studies in the literature. What I really want to know is which of these approaches misleads us the least: effective AIC (involving -2 LL and effective d.f. upon any penalization) and LOO.

Alex Hayes: I personally find it most intuitive to think of AIC as a unbiased risk estimate, where the de-biasing term / penalty is derived based on your probability model. LOO on the other hand should give you an unbiased risk estimate via sample splitting. The sample-splitting approach should give unbiased estimates under more general conditions, since we just need independence, whereas AIC’s unbiasedness depends on getting the probability model right.

This framing makes most sense if you’re thinking about an arbitrary loss function. In practice, though, both the AIC and LOO use the likelihood as the loss function, so it’s little weird to reduce the dependence of the loss (likelihood) on the probability model. But sometimes likelihood maximization is M-estimator-y; for example, maximizing the likelihood of a Gaussian linear regression is the same as minimizing the RMSE loss. For this kind of model, where likelihood maximization is a good idea irrespective of the probability model, I can imagine there is some advantage to the LOO approach under model misspecification.

This is all more of a thought exercise than anything else, but I do find the question of AIC vs LOO pretty interesting. If you commit to a full likelihood, it’s hard to come up with compelling reasons for AIC over LOO since they should give you about the same thing when the model is correct. But LOO feels like it should be much better somehow. I imagine that there is some discussion of this in the LOO vs WAIC vs DIC vs etc etc portions of the LOO papers.

FH: Excellent thoughts Alex. Thanks. I think this way: AIC depends on knowing the correct effective degrees of freedom to penalize for. If you have done feature selection or other data mining, AIC using the apparent d.f. doesn’t work, but LOO can still work so it is a bit more general.

Drew Levy: Ultimately, real-world decision-making is a forecast: conditional on a set of premises provided in data it is a prediction about what course of action is likely to yield the best result; especially for individual patient-level decision-making (e.g., Precision Medicine, Personalized Medicine, quality of care). Well formulated conditional probabilities (the left-hand side of the equation: l.h.s.) are high-value information about both expected and potential outcomes and uncertainties.

The RCT, for isolating the effect of a particular variable (e.g., a biomarker), has been the idol of evidence for rigorous causal inference and has led to a reductionist focus on particular independent effects. The RCT mind-set has encouraged a selective tunnel-vision focus on particular items in the right-hand side (r.h.s.) of the equation, which can distort the assessment of the real value added by new predictors. Novel sources of information should not automatically or routinely discount and displace previous information.

Models that make accurate predictions of responses for future observations by judiciously incorporating all relevant information—new and previous—for decision making perform the correct calculus of integrating information, and provide correct output for informing decisions with explicit probability and uncertainty estimates (i.e., l.h.s.). Dr. Harrell’s post illustrates optimal methods to quantify the added predictive value of new measurements as ancillary to coherent l.h.s. information and auxiliary to previous information. This supports both judicious consideration of new technology and better l.h.s.-thinking.

The exigencies of contemporary real-world health care decision-making demand much more from our data, and more thoughtful approaches for analytics from us. LHS-oriented decision-making maps observations to actions, better supports effective care related decision-making and a learning health care system. This blog post addresses a prevalent and critical opportunity for creating real value from data—and for improving outcomes for patients.

FH: Thanks very much for your thoughts Drew. You always see the bigger picture better than I.

Hi Frank,

Fabulous page. I’ve also been looking to compare two models on an external dataset that predict a continuous outcome. I don’t have the original data, to ensure appropriate blinding to the external data, I just have my two models predictions from the external data. I can fit two new lrm() models with just the model predictions as independent variables, but when I try to run a likelihood ratio test from rms::lrtest(model_1, model_2) it complains that 'models are not nested. I’ve found the package lmtest::lrtest(model_1, model_2) does give me an output, but I’m a little worried as I can’t get my head around the difference between the likelihood ratio on nested and non nested models. Is there any chance you could explain how nesting changes likelihood ratio statistics?

Although it’s OK to use the two likelihood ratio \chi^2 statistics or AIC in isolation, the models must be nested and estimated on exactly the same observations before you can get a proper test statistic that compares the models. You might compare them informally on adjusted R^2 which is derived from the \chi^2 s.

Thanks very much. I’m interested as to why it would be necessary to use adjusted R2 here though - if the models are fixed equations that have been created from a different dataset, then isn’t it penalty enough that the external data is unseen. I have been manually calculating R2 from the residuals of the predictions versus observed, rather than taking the R2 from a linear model fit through the predicted and observed external data, in a hope that that accounts better for miscalibration (because an R2 of points that were consistently predicting 10 below the observed values would still have an R2 of 1.0 in a linear model through predicted and observed values, which would be an extreme example of misrepresentation of the utility of the model). However, I’m struggling to explain that succinctly for a journal. Is there a way I could robustly compare the calibration slope or calibration intercept of the two models on external data, or the calibration intercept at clinically relevant points along the continuous outcome variable (i.e. the typically used ‘cut point’ for a pass?)

When using these methods what constitutes a nested model? Could I use these same methods to quantify the added predictive value of modelling naturally continuous predictors as continuous variables compared to dichotomised versions of the same variables? Using the same data as described in the blog post, where age65 is defined as 0 if age < 65, but 1 if age ≥ 65:

Model 1: lrm(formula = sigdz ~ age65 * sex, data = acath)

Model 2: lrm(formula = sigdz ~ rcs(age, 4) * sex, data = acath)

Model 3: lrm(formula = sigdz ~ rcs(age, 4) * sex + rcs(choleste, 4) + rcs(age, 4) %ia% rcs(choleste, 4), data = acath)

May I compare Model 2 with Model 1 using the same methods described by the blog post, inferring that keeping age as continuous and allowing it to be non-linear adds a fraction of X new diagnostic information? May I compare Model 3 to Model 1?

I’m fairly confident you can use these methods for everything you need other than P-values.

To create truly nested models for which you can do any kind of analysis you can add a discontinuity at 65. This is easy to do with this approach.

Thank you. But could you point me to a definition or resource that defines what constitutes a nested model? For some reason I have been unable to find a definition. Do models using the same base variables fit on the same cohort always constitute nested models, regardless of what transformations the variables have been put through?

Nested model = same subjects and all of the parameters in the smaller model (regression coefficients, variances, etc.) are also found in the larger model.

I want to confirm my understanding that you recommend a variance to observe model improvement (e.g. 0.057 to 0.047 after adding new biomarkers).

This is because:

For binary outcomes, variance represents the difference in probability between 0 (no incidence) and 1 (incidence). The larger the variance on the probability scale, the better because it indicates greater variability in outcomes.

Large variance means that the model is better able to distinguish different outcomes (no incidence, incidence). So the larger the variance, the better (that is, when you say “When the new markers add clinically important predictive information, the histogram widens.”).

Is my understanding correct? Thank you very much.

I currently view the variance of the predicted values as being almost as good as a log-likelihood-based measure, assuming that there is no overfitting or that all models being compared have equal amounts of overfitting (e.g., almost equal number of parameters). The variance is not corrected for overfitting. Contrast that with adjusted pseudo R^2 measures for which we have simple overfitting corrections.

Thank you very much. I may not have expressed myself very clearly, or my limited knowledge may have hindered me from fully understanding. What I find confusing is why a larger variance of predicted values is considered better. Intuitively, it seems that a smaller variance in predicted values would be preferable. In your article, you mentioned, ‘When the new markers add clinically important predictive information, the histogram widens.’ I’m not quite clear on why the histogram widens. If you could provide me with more information or clarification, that would be helpful.

In contrast to the hopefully small variance of the predicted value for a single person, we want the variance of the predictions over persons to be large. Think of the opposite: a predictive algorithm that gives the same prediction for every person. These predictions would have no predictive discrimination. This would be like a rain forecast that is a 1/5 chance of rain every day of the year.

Look at it another way: the R^2 in ordinary regression is the variance of the predicted values divided by the overall variance of Y, aside from a minor correction factor.

Thank you, Professor Harrell.

I had also considered the variance in R2 before, but the pseudo R2 in logistic / survival models is calculated using log likelihood. Therefore, I’m not too sure. However, since you express it this way, it seems that R2 is also a direction for understanding this issue.

Emphasizing predictive discrimination is a convincing reason to me. I’ll try to use your comments to explain this issue when talking to others.

Pseudo R^2 uses log-likelihood as you said. In the normal case it reduces exactly to ordinary R^2 as the log-likelihood is to within a scaling constant and a constant increment equal to the sum of squared errors. There are also generalized measures of explained variation based on the variance of predicted values.

It seems that my understanding of R2 is still not quite comprehensive. I will read more materials to gain a better understanding.

There is one more thing. I encountered trouble using the rexVar function “Relative Explained Variation” in the new version of rms. Under the cph function, it returns an error message (Error in a%*% b : non-conformable arguments.). I have also submitted this issue on GitHub. It’s possible that there’s an error in my code. If possible, could you please take a look at where the problem might be? I’ve written a simple example to reproduce the issue. My R version is 4.3.2, and I’m using the latest RStudio.

n <- 1000

set.seed(1234)

age <- rnorm(n, 50, 10)

blood.pressure <- rnorm(n, 120, 15)

cholesterol <- rnorm(n, 200, 25)

time <- runif(n, 1, 15)

status <- round(age/100,0)

d <- data.table(age,blood.pressure,cholesterol,time,status)

model_M1 <- cph(

Surv(time, status) ~ rcs(age, 3) * rcs(blood.pressure, 3),

x = TRUE,

y = TRUE,

data = d

)

g <- bootcov(model_M1, B=20)

rexVar(g, data=d)

I apologize for posing too many questions…

Sorry for the bug. It’s fixed now.