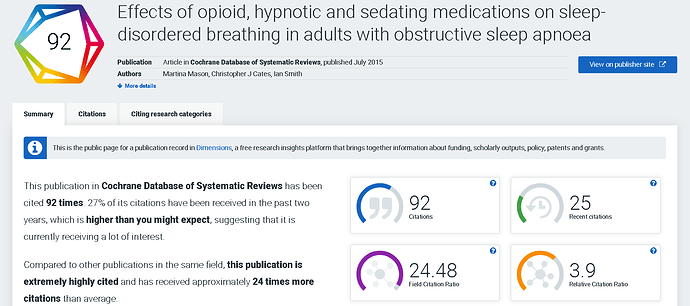

Yikes! Mortifying to think that there might be physicians who applied the findings from the linked Cochrane review to their prescribing practices for patients with sleep apnea…

Yes, this is the unfortunate consequence of 1980s “Pathological Consensus”: research using the guessed metric may be used for high-risk medical decision making. It is very likely that many physicians made decisions based on this review, as it has been highly cited.

Furthermore, the Cochrane Collaboration is considered a gatekeeper. They are, and should be, highly respected. They did not know, and neither did the authors or the statisticians. This is a tragedy on a grand scale.

This is why I have called for a recall of the use of Pathological Consensus-based metrics for clinical trials. They may be useful clinically, but they were guessed and have no place as valid measurements in clinical trials, where their use can lead to wrongful conclusions and be harmful to risk-defining decision making. The recall should be promulgated by the leadership soon.

Finally, a notice stating that the AHI and ODI were used for severity indexing in this study, and that they are not valid surrogates for sleep apnea severity (especially as it relates to opiates), should be provided promptly, as this article is still being highly cited, it has been included in at least one guideline, and it is (apparently) still considered reliable evidence.

Quoting the conclusion of the article above, specifically relating to opioid, hypnotic, and sedating medication use in OSA.

“The findings of this review show that currently no evidence suggests that the pharmacological compounds assessed have a deleterious effect on the severity of OSA as measured by change in AHI or ODI”.

Since the AHI is the standard measure of OSA severity, this is a powerful statement.

Most here at this forum are outlier expert statisticians, mathematicians and clinicians. Most are here because we love science and math. There are no citations which are going to generate CV expansion.

However, this paper is a reminder that we are all at the bedside. What we do or do not do affects decision making and patient care. We see a methodological mistake like this and we want to turn away. We don’t want to get involved. It’s like seeing bad care at the hospital: healthcare workers want to turn away. We should not… It is a responsibility we bear as healthcare scientists. We have a great purpose. Let us embrace it without undue deference to expediency.

Thanks to all and especially Dr. Harrell for this wonderful, scientifically rich, forum…


To deny the negative influence of opioids and sedatives on the patency of the upper airway is to deny the years of solid, real world, pathophysiologic evidence (aka ‘common sense’) that supports the notion that these commonly used drugs worsen SDB and may do more harm than good. (see refs below).

But the saving grace of the above statement may be its ending: “…as measured by change in AHI or ODI”. The AHI has unjustly assumed its perch as the gold standard for diagnosis of OSA, and the bold untruth of the Cochrane conclusion statement exemplifies the danger of unchecked ‘pathological consensus’. So the qualifier at the end of the statement may actually be its saving grace.

Cochrane Reports strive to be the final word on safety and efficacy evidence.

My favorite from Cochrane is ref (4): “…studies of perioperative monitoring with a pulse oximeter were not able to show an improvement in various outcomes.” I’m delighted the bean counters haven’t snatched pulse oximeters from our hands because they don’t offer benefit. I shudder to think what would happen.

Let’s try another: “Tom Brady is not deserving of his title as the G.O.A.T., as measured by grimaces on the faces of the fans in the stands” (away games only).

I’m enjoying the discourse.

FJO

- Overdyk, Frank J., et al. “Association of opioids and sedatives with increased risk of in-hospital cardiopulmonary arrest from an administrative database.” PloS one 11.2 (2016): e0150214.

- Overdyk, Frank J., and David R. Hillman. “Opioid modeling of central respiratory drive must take upper airway obstruction into account.” The Journal of the American Society of Anesthesiologists 114.1 (2011): 219-220.

- Izrailtyan, Igor, et al. “Risk factors for cardiopulmonary and respiratory arrest in medical and surgical hospital patients on opioid analgesics and sedatives.” PloS one 13.3 (2018): e0194553.

- Pedersen, Tom, Ann M. Møller, and Bente D. Pedersen. “Pulse oximetry for perioperative monitoring: systematic review of randomized, controlled trials.” Anesthesia & Analgesia 96.2 (2003): 426-431.

This December 17th article extends the conversation above by presenting the consequences of Pathological Consensus during the pandemic. It is likely that many patients died during the pandemic due to adherence to False Evidence-Based Medicine (FEBM). False EBM is EBM based on RCT performed using fake (guessed) measurements. Here we see again that we are all at the bedside and that patient harm can be the consequence of performing RCT using fake consensus measurements… The action is not benign. See “ARDS: hidden perils of an overburdened diagnosis” (Critical Care).

Here it’s interesting to see the focused consequences of the broad, system-wide failure of the platform approach of pathological consensus. Dr. Tobin’s article exposes one dimension: the adverse bedside care of “ARDS” driven by those wishing to standardize criteria for RCT within pathological consensus, the present, failed domain of critical care syndrome science.

Of course, Dr. Tobin is only lamenting in the vertical space, and as the thread above shows, the problem is much broader than that. Agonizing over the vertical, even with the eloquence of Dr. Tobin, renders little more than the appearance of alternative thought, making the science appear more robust. It does not disturb the root cause, the domain itself.

Now, after the pandemic, the enlightened mind can readily perceive the broad counter-instances that the trialists still discount as isolated anomalies. We know they do because they have introduced a new synthetic syndrome they call “NON COVID ARDS”. Within the domain of pathological consensus, they are allowed to simply create new synthetic syndromes without discovery of their measurements.

In other words, since severe COVID pneumonia met the guessed Berlin criteria for ARDS but did not do well under the protocolized treatment defined by RCT for ARDS, they will now simply exclude COVID by defining two new synthetic syndromes, “COVID ARDS” and “NON COVID ARDS”. Other domains require discovery of new syndromes; not so the domain of pathological consensus. In that domain, leaders are free to simply pivot on a whim in preparation for the next RCT and protocol set.

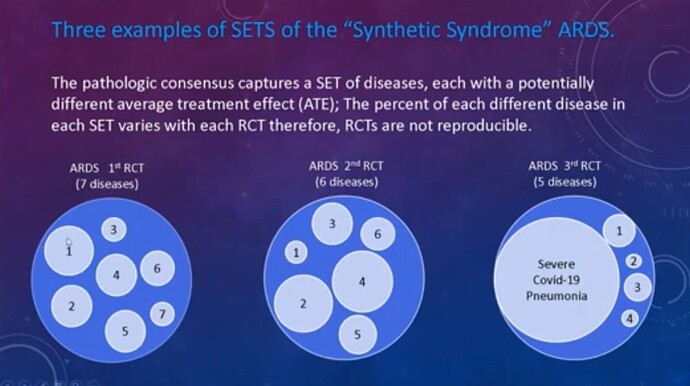

I have shown that pathological consensus is the domain in which critical care syndrome science operates, and that the variable SETS of different diseases with disparate average treatment effects, captured by the guessed measurements (criteria) of the pathological consensus, cause non-reproducibility of the RCT. The image above shows the problem, which they now seek to solve by administrative action (excluding COVID) rather than by reconsideration of the failed dogma.
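To make the case-mix argument concrete: if a set of criteria captures several diseases whose true treatment effects differ, the average treatment effect a trial estimates is (ignoring sampling error) the case-mix-weighted average of those subgroup effects. The sketch below is a minimal Python illustration under that assumption; the function name, disease labels, effect sizes, and case mixes are all hypothetical numbers chosen for illustration, not data from any trial.

```python
# Hypothetical illustration (not trial data): a criteria-defined "syndrome"
# enrolls a mix of distinct diseases, each with its own true treatment effect.
# Ignoring sampling error, the pooled average treatment effect (ATE) is the
# case-mix-weighted average of the subgroup effects.


def pooled_ate(case_mix: dict[str, float], subgroup_effects: dict[str, float]) -> float:
    """Return the case-mix-weighted average of subgroup treatment effects.

    case_mix: disease -> fraction of enrolled patients (fractions sum to 1)
    subgroup_effects: disease -> true absolute risk difference in mortality
    """
    if abs(sum(case_mix.values()) - 1.0) > 1e-9:
        raise ValueError("case-mix fractions must sum to 1")
    return sum(frac * subgroup_effects[disease] for disease, frac in case_mix.items())


# Hypothetical true effects: negative = fewer deaths with treatment.
effects = {"viral_pneumonia": -0.10, "trauma": 0.02, "pancreatitis": 0.03}

# Two trials using identical entry criteria but enrolling different case mixes.
trial_a = {"viral_pneumonia": 0.1, "trauma": 0.5, "pancreatitis": 0.4}
trial_b = {"viral_pneumonia": 0.8, "trauma": 0.1, "pancreatitis": 0.1}

for name, mix in (("trial A", trial_a), ("trial B", trial_b)):
    print(f"{name}: pooled risk difference {pooled_ate(mix, effects):+.3f}")
```

With these made-up numbers, the same criteria and the same treatment give a pooled risk difference of +0.012 (slight harm) when trauma and pancreatitis dominate enrollment and -0.075 (clear benefit) when viral pneumonia dominates, although no subgroup's biology has changed.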

Pathological consensus replaces the more difficult task of discovery. They guess, rather than discover, the measurements, allowing RCT to proceed. At the bedside, the protocols replace the more difficult patient-focused care, allowing bedside care to be easily performed without prolonged bedside attendance by the academic. This also allows easy pseudo-quality measurements as a function of compliance with the surrogates derived from the domain.

It should now be obvious to all that pathological consensus is a learned (1980s) top-down administrative technique; it is not science and renders only pathological science. In this domain the leaders are free to adjust the top whenever needed, as if one could simply guess a new orbit for Mars.

The new variables of the pandemic exposed the pathological science of ARDS as a simplistic technique built from the top down and grounded in invalid, ephemeral assumptions. ARDS science is simply built on the pathological consensus domain, the same domain upon which sepsis and sleep apnea were built, so all are freely amendable. This is not a benign construct. Rather, it is a dangerous, fundamental, standardized error in methodology which leads to false EBM and patient harm.

Now is the time to put forward a set of guidelines defining how clinicians will interpret the work of trialists and statisticians who perform RCT under Pathological Consensus. At NASA, astronauts were following the rules of the scientists when a lethal fire (the pandemic equivalent) changed everything. The astronauts were scientists themselves, well trained in physics and, more importantly, in the live expression of it. They set up their own rules and announced they would not consume the work of the scientists unless certain guidelines to assure the quality of the science (safety) were met. This is what is required now in critical care.

In reality, the free-thinking, physiology- and pathophysiology-trained clinician is, ironically, the more academic of the players, given that the trialists and their acolytes cling to the simplistic thinking of the failed dogma. The math they present seems so empowering, but without solid measurements the math is an embellishment, a sleight of hand by the trialists, luring in the math-enamored masses despite repeated decadal cycles of failure and top-down amendments to suit the output, like “NON COVID ARDS”.

So what might clinicians set as rules defining the minimum standards for research they are willing to consume? Here are a few starters.

- Measurements (e.g. threshold criteria) for RCT cannot be guessed.

- SETS comprised of many different diseases with potentially disparate average treatment effects cannot be combined as a syndrome for RCT just because they meet a guessed set of criteria and have similar clinical presentations.

- All present standard consensus-derived measurements (criteria) must be investigated to determine whether they were guessed and to define the scientific rigor of their derivation.

- RCT performed using Pathological Consensus (guessed measurements) are not just wasteful; they lead to false evidence-based medicine and are therefore dangerous to the health of the public. Discovery first, RCT second, no guessing.

If you haven’t watched the 20-minute talk describing the unbelievable, tragic history of fake measurements, false RCT, false EBM, and Pathological Consensus, don’t miss it. It will remind you that even after the lessons of Galileo, science still operates as a collective which often mandates failed dogma, and this is potentially dangerous for our patients, for our families when they become sick, and for us all. …

The methods of Pathological Consensus are simple… The consensus group is free to update and change the measurements for RCT…

Don’t miss the sister discussion of a new update at

Changing SOFA would presumably change the criteria for RCT of the “Synthetic Syndrome” of sepsis, which is based on the old 1996 SOFA. Here you see how variable the synthetic syndrome actually is and why the RCT are not reproducible.

Not only does the guessed set of thresholds capture a variable mix of diseases but the guessed set itself also changes at the will of the consensus group…

RCT reproducibility using guesses (especially freely changeable guesses) as measurements should not be expected…

Very exciting developments.

After 35+ years of refusal to budge, the thought leaders related to the sleep apnea hypopnea syndrome (SAHS) have decided to convene a discussion about the need for new measurements of severity in sleep apnea.

It is likely that this thread (11K views) and the sister thread “What is a fake measurement tool and how are they used in RCT” (with over 13K views) had much to do with this welcome, nascent acquiescence. Thanks to everyone who forwarded the links to these threads.

The power of social media is enhanced by the fact that most researchers want to do the right thing; they are simply blinded by dogma and by the drive to get grants by conforming to the prevailing thought. They are first trained within, and then contribute to, generational cycles of indoctrination.

This is why relentless pressure for introspection and reform from social media can work. Most want to get it right; they just have to be convinced of the need to open their intellectual horizons…

Sign up to join today. This is an opportunity for statisticians to see how measurements for RCT are derived near the end of the 1970s-2020s era of #PathologicalConsensus.

January 27, 2023 (Friday) at 12noon EST/18:00 CET @ATSSRN @EuroRespSoc

https://t.co/ZCDiVqta10

The Smoking Gun

Proving the Need for Guidelines for Development of RCT Measurements

Up until now I did not have proof that RCT measurements were simply guessed, and I’m sure many of you did not believe me. It is hard to believe that three decades of sleep apnea, ARDS, sepsis, and other critical care RCT and observational trials have used guessed (not discovered) threshold sets as measurements. Recall that in my video I presented the Apnea Hypopnea Index (AHI) as the prototypic pathological consensus measurement, as it is a set of thresholds guessed in the 1980s as the standard (indeed mandated) measurement for sleep apnea research.
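For statisticians less familiar with the measurement itself: the AHI is the count of scored apneas plus hypopneas divided by hours of sleep, and severity is then assigned by fixed cut points. The minimal Python sketch below assumes the commonly used adult cut points of 5, 15 and 30 events per hour and takes the event counts as given; the scoring rules that decide what counts as an apnea or hypopnea are a further layer of thresholds not modeled here. The function names are hypothetical.

```python
def apnea_hypopnea_index(apneas: int, hypopneas: int, sleep_hours: float) -> float:
    """Events per hour of sleep: (apneas + hypopneas) / total sleep time."""
    if sleep_hours <= 0:
        raise ValueError("sleep_hours must be positive")
    return (apneas + hypopneas) / sleep_hours


def severity(ahi: float) -> str:
    """Map an AHI to a severity band using assumed cut points of 5, 15 and 30."""
    if ahi < 5:
        return "normal"
    if ahi < 15:
        return "mild"
    if ahi < 30:
        return "moderate"
    return "severe"


# Two hypothetical 8-hour nights that differ by two scored hypopneas.
night_1 = apnea_hypopnea_index(apneas=40, hypopneas=79, sleep_hours=8.0)  # 14.875
night_2 = apnea_hypopnea_index(apneas=40, hypopneas=81, sleep_hours=8.0)  # 15.125
print(severity(night_1), severity(night_2))  # mild moderate
```

Two extra scored hypopneas over a whole night move this hypothetical patient from "mild" to "moderate"; the category boundary, not any measured change in physiology, does the work.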

Here in the ATS and European Respiratory Society Seminar is “The Smoking Gun”

Watch at least these five minutes: scroll to 22:00 and watch at least to 27:30.

http://ow.ly/pbaT50MJK66

I contend that guidelines are necessary now because standard and mandated RCT measurements are often guessed (without discovery), and that this started in the 1980s when trialists decided they could implement their own guessed threshold sets as measurements and thereby move directly to performing RCT, effectively bypassing the pivotal discovery phase of science. This I call “Pathological Consensus”.

I appreciate the courageous candor those in the seminar display. Recall that I mentioned the Chicago consensus meeting discussed in my video… Only statisticians will be able to help them by setting up guidelines for RCT measurements, because most trialists in the fields of sleep apnea and critical care were indoctrinated in this 1970s-1980s methodology, just as I was until the 1990s.

It is only about 5 minutes, so please watch the section of the seminar from 22:00 to 27:30.

Now that you have seen “the smoking gun”… Please. Help.

It’s up to us. There is no backup…

I began the campaign teaching about the pitfall of deriving “fake RCT measurements” for the performance of RCT in the study of synthetic syndromes before the pandemic:

What is a fake measurement tool and how are they used in RCT.

It is amazing that in the middle of these teachings, the emergence of the pandemic provided a natural social experiment to show how critical care researchers would respond to a clear counter-instance to RCT applied to synthetic syndrome science.

Acute Respiratory Distress Syndrome (ARDS) is one of those “synthetic syndromes”. ARDS was defined nearly 50 years ago by a pulmonologist named Tom Petty. See his editorial “The adult respiratory distress syndrome (confessions of a ‘lumper’)” (PubMed).

Dr. Petty was a confessed lumper, and he included severe viral pneumonia in the ARDS definition he made up. Unbelievably, nearly 50 years later, Dr. Petty’s decision to include severe viral pneumonia in ARDS would have a severe impact on perceived EBM care for COVID pneumonia in 2020. It also provides the basis for us to see how synthetic syndrome scientists think when faced with a disease (COVID pneumonia) that never existed before Dr. Petty’s guess but meets the criteria for the syndrome he made up nearly 50 years earlier.

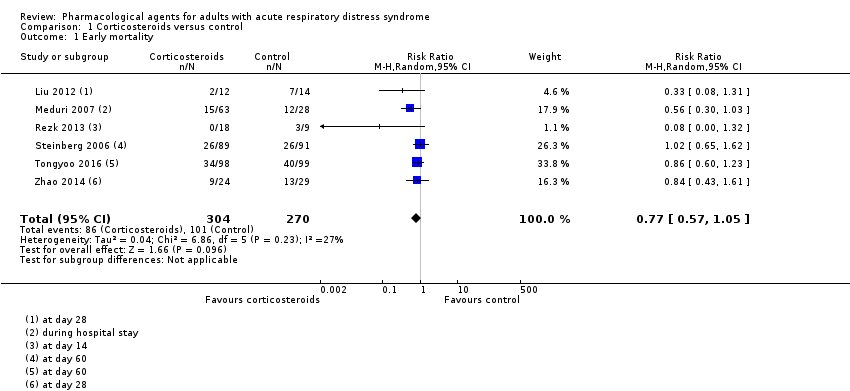

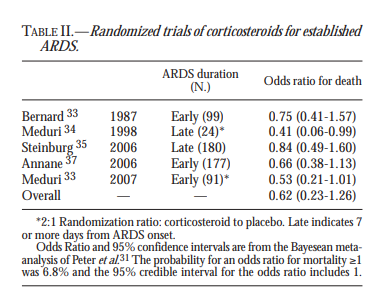

So here you see them spending over 30 years studying corticosteroid treatment of Tom Petty’s ARDS.

https://www.minervamedica.it/en/freedownload.php?cod=R02Y2010N06A0441

Then, about 12 years later, in 2019 (one year before the pandemic), a Cochrane review provided a meta-analysis examining the mortality endpoint associated with corticosteroids in ARDS. The review states: “We found insufficient evidence to determine with certainty whether corticosteroids… were effective at reducing mortality in people with ARDS.…” This RCT evidence caused perceived “evidence-based” opposition to corticosteroid treatment of “ARDS” due to severe COVID-19 pneumonia (remember, severe COVID pneumonia was called ARDS because Tom Petty included severe viral pneumonia in the ARDS definition he made up in the 1970s).

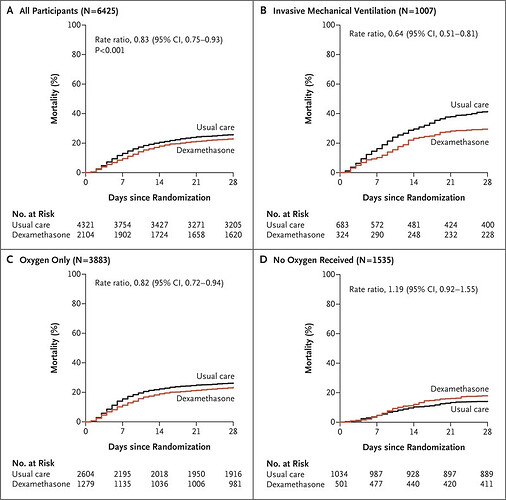

In contrast to the 35 years of “ARDS” RCT, this is the study of corticosteroids in severe COVID pneumonia, which nevertheless meets the 1970s definition and the 2012 criteria for ARDS.

Now those clinging to the RCT and metanalysis for ARDS (the Tom Petty synthetic syndrome) were totally confused.

So this is what has emerged from this social experiment: the splitting of the ARDS synthetic syndrome into a dichotomy.

But why a dichotomy? Why should we believe that NON COVID ARDS is a syndrome? If we had a pandemic of pancreatitis, would we be splitting off ARDS secondary to pancreatitis based on its effect on the results of a meta-analysis in which so-called pancreatitis-associated ARDS dominated?

It is interesting to watch and read the effects of the cognitive flagellations as the application of RCT to Tom Petty’s 1970s guessed synthetic syndrome is exposed as non-science by the emergent pandemic. But couldn’t we see it was non-science all along? We bundled viral pneumonia-induced pulmonary dysfunction due to a novel virus in 2020 with pulmonary dysfunction associated with trauma for an RCT because one pulmonologist thought that was a good idea in 1976?

Worse though is the emergence of the new synthetic syndrome for RCT “Non COVID ARDS”. This shows the lesson of the pandemic was not learned. RCT are not applicable to guessed cognitive buckets of diseases because the bucket mix changes all the time. Sometimes that change is due to a new virus. Scientists must learn the lesson the new virus has taught about the futility of guessing syndromes and making up measurements for RCT.

Now think again about this process:

- Prior to 1975, Tom Petty guesses a syndrome, “ARDS”, and includes severe viral pneumonia.

- 1987 RCTs begin investigating corticosteroids in the treatment of Tom Petty’s guessed syndrome…

- Over 3 decades the results of the RCT for “ARDS” are mixed but largely weak or negative.

- COVID Pneumonia emerges as the greatest acute killer since WW2.

- Academics decide that, since COVID pneumonia meets Tom Petty’s 1970s definition (as amended) and corticosteroids have failed in RCT of Tom Petty’s ARDS, these drugs should not be used in severe COVID pneumonia.

- An RCT is performed only on severe COVID pneumonia and it shows efficacy.

This RCT of the synthetic syndrome “obstructive sleep apnea” has it all.

- Use of two guessed measurements for endpoints (the AHI and ODI)

- The guessed measurements are both comprised of guessed thresholds from the 1980s.

- Selection of two arbitrary (guessed) threshold changes from baseline for endpoints. (50% & 25%)

- Selection of an arbitrary threshold as an endpoint. (AHI of 20, which previously was considered moderate severity but for this RCT is selected as an endpoint target).

“Responder rates were used to evaluate efficacy end points, with responders defined by a 50% or greater reduction in AHI to 20 or fewer events per hour and a 25% or greater reduction in oxygen desaturation index (ODI) from baseline.”
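The quoted responder definition can be written out explicitly. The sentence can be read either as two separate responder rules (one for AHI, one for ODI) or as one combined rule; the minimal Python sketch below codes the two rules separately and leaves their combination open, without claiming which reading the trial used. The function and parameter names are hypothetical; only the default thresholds (50% AHI reduction, follow-up AHI of 20 or fewer events per hour, 25% ODI reduction) come from the quote.

```python
def ahi_responder(baseline_ahi: float, followup_ahi: float,
                  min_reduction: float = 0.50, max_followup: float = 20.0) -> bool:
    """>= 50% AHI reduction from baseline AND follow-up AHI <= 20 events/hour."""
    if baseline_ahi <= 0:
        raise ValueError("baseline_ahi must be positive")
    reduction = (baseline_ahi - followup_ahi) / baseline_ahi
    return reduction >= min_reduction and followup_ahi <= max_followup


def odi_responder(baseline_odi: float, followup_odi: float,
                  min_reduction: float = 0.25) -> bool:
    """>= 25% reduction in oxygen desaturation index (ODI) from baseline."""
    if baseline_odi <= 0:
        raise ValueError("baseline_odi must be positive")
    reduction = (baseline_odi - followup_odi) / baseline_odi
    return reduction >= min_reduction


# Hypothetical patients: same follow-up AHI of 18, different baselines.
print(ahi_responder(36, 18), ahi_responder(32, 18), odi_responder(28, 20))  # True False True
```

Two hypothetical patients with the same follow-up AHI of 18 are classified differently purely because of where each started relative to the 50% cut.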

Remember, sleep apnea is the prototypic synthetic syndrome of those discussed in this thread. Hypoglossal nerve stimulation with an implanted device is presently marketed as an optional treatment for obstructive sleep apnea.

Remember that in the ATS and ERS seminar the AHI was identified as non-reproducible and poorly correlated with morbidity.

If you missed it earlier, here in the ATS and European Respiratory Society seminar they discuss the AHI:

Watch at least these five minutes: scroll to 22:00 and watch at least to 27:30.

http://ow.ly/pbaT50MJK66

As I have pointed out in the past, in “synthetic syndrome science” the measurements for the RCT endpoints are arbitrary and comprised of guessed thresholds, so the researcher is free to pick any arbitrary, guessed threshold value for an endpoint, or any threshold change from baseline for an endpoint. In fact, here they guess two different arbitrary changes from baseline for two different guessed measurements for the RCT.
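The freedom to pick thresholds can be illustrated by recomputing a responder rate over a small grid of threshold choices applied to the same patients. This is a minimal Python sketch; the cohort of (baseline, follow-up) AHI pairs is invented for illustration, the grid values are arbitrary, and `responder_rate` is a hypothetical helper, none of it taken from any trial.

```python
from itertools import product

# Invented (baseline AHI, follow-up AHI) pairs for ten hypothetical patients.
cohort = [(30, 14), (35, 19), (40, 22), (28, 16), (45, 20),
          (32, 12), (38, 25), (26, 10), (50, 24), (24, 18)]


def responder_rate(pairs, min_reduction, max_followup):
    """Fraction of patients with reduction >= min_reduction and follow-up <= max_followup."""
    hits = sum(
        1 for baseline, followup in pairs
        if (baseline - followup) / baseline >= min_reduction and followup <= max_followup
    )
    return hits / len(pairs)


# Same patients, same treatment; only the chosen thresholds vary.
for min_red, ceiling in product((0.25, 0.50), (15, 20)):
    rate = responder_rate(cohort, min_red, ceiling)
    print(f"reduction >= {min_red:.0%}, follow-up AHI <= {ceiling}: {rate:.0%} responders")
```

With these invented numbers the responder rate ranges from 30% to 70% depending only on which pair of thresholds is chosen.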

If you have followed this thread please join in the discussion at the new linked thread which discusses Syndrome Science based research of ARDS, which was perceived as relating to the critical care management of COVID pneumonia.

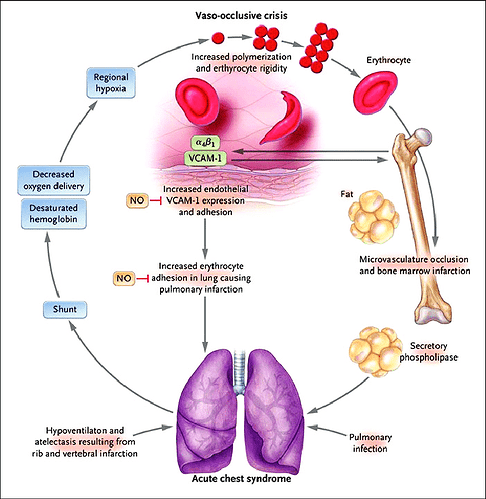

I was wondering whether acute chest syndrome might be another example of this lumping together of disparate entities into a syndrome. It seems acute chest syndrome can occur due to fat embolus, atelectasis secondary to vaso-occlusive pain, infection, or asthma, which seem to be pretty different causes.

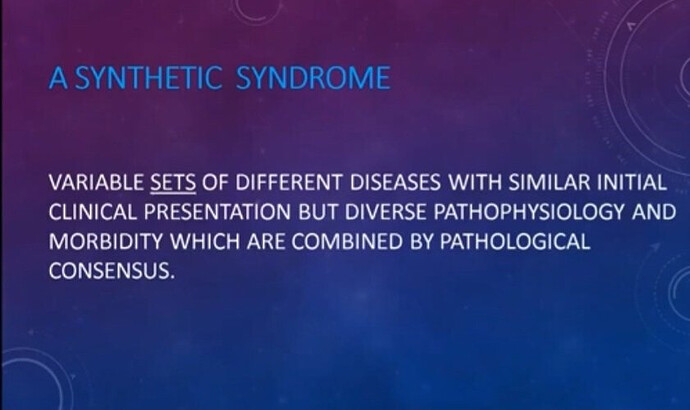

This is an excellent question because it brings into focus the relationship of clinical syndromes and the group I will call “Synthetic Syndromes”.

Synthetic syndromes are legacy syndromes guessed in the 60s and 70s, which are comprised of variable sets of diseases having diverse pathophysiology but which are combined by pathological consensus. These include ARDS, sepsis and sleep apnea. They exist today as synthetic syndromes because they present clinically in a strikingly similar way, and one or more physicians in the remote past lumped them together, thinking they had a common pathophysiologic basis. Over many decades the synthetic syndrome became the platform dogma for funding, research and protocolization.

Specifically, synthetic syndromes lack a common fundamental (apical) pathophysiologic basis.

In contrast, Acute Chest Syndrome (ACS) is a clinical syndrome which potentially comprises a set of pathologies caused by sickle cell disease (SCD). Here the syndrome (as a cognitive entity) is really a memory or teaching support tool, useful to herald the need for testing and intervention responsive to the common cause (which is SCD) and one or more associated triggers such as infection.

Clinical syndromes like ACS are highly useful. Object thinking and teaching are highly efficient and effective, so lumping the different manifestations into a syndrome is effective for teaching, memory and awareness. Here there is a common pathophysiologic basis. The measurements defining a clinical syndrome may rise to the statistical level for the study of treatment targeting the apical pathophysiology or a substantial component of the downstream pathophysiology.

However, a methodological error occurs when trialists and statisticians assume that guessed measurements defining synthetic syndromes like ARDS (things that look similar but lack a common pathophysiologic basis) rise to the statistical level. There may be some value in teaching a given synthetic syndrome, but it is cognitively hazardous to think of a synthetic syndrome as an object for a common protocol or for RCT.

This is the pivotal point that trialists must understand. Clinical syndromes with a common pathophysiologic basis are objects for which measurements may rise to the statistical level.

In contrast, legacy syndromes which, after 40-50 years, have been found to lack a common pathophysiologic basis and which are comprised of a set of disparate diseases are “synthetic syndromes”. They look like a single object. Guessed measurements of synthetic syndromes do not rise to the statistical level. The mix of diseases captured by the guessed measurements will change with each RCT, and since they lack a common pathophysiologic basis, this generates non-reproducibility.

If, in the future, a common pathophysiologic basis for one or more of these synthetic syndromes and/or reproducible signals responsive to a common pathophysiology is(are) discovered, then measurements derived from that discovery will likely rise to the statistical level.

Alternatively the synthetic syndrome may be divided into subsets but only wherein a common pathophysiologic basis or fundamental specific signal for each such subset is discovered (as is true for some types of sleep apnea).

Most importantly, your question brings into focus the difference between a “clinical syndrome” and a “synthetic syndrome”. That’s a pivotal point for all to consider.

Our new book on Amazon shows the history of RCT in critical care. (Somehow I cannot log in under my name.)

This book reviews critical care research history from 1963 to the present and shows the deviation critical care made from sound RCT science. It also reviews sleep apnea severity science.

The book explains the mistakes in detail showing that the present state is artificial and unsustainable.

It explains decades of failure, including the failure of accepted RCT ventilator science during COVID.

The book is free to anyone who sends a request.

The Physician’s War: The Story of the Hidden Battle between Physicians and a Science Based on Pathological Consensus

From the book’s introduction, showing how the RCT was inadvertently corrupted:

“The machine the rebels oppose is a massive infrastructure; its main tool is a science based on ‘Pathological Consensus’, a dangerous but seductive pathologic version of science, which masquerades as real science. Pathological Consensus emerged, as if out of the ether, in the 1960s. Its advocates soon dominated the landscape, offering easy research and the promise of a simple path to the discovery of one-size-fits-all protocols for large groups of diseases, which they lumped together as Synthetic Syndromes.

In the 1960s, these machine creators had visions of massive breakthrough cures for large groupings of similar-appearing diseases. No one knew it, but the machine’s creators had subtly hijacked and corrupted the breakthrough method of the randomized controlled trial (RCT), which had been introduced two decades earlier by Austin Bradford Hill based on the work of the polymath genius Ronald Fisher. The creators inserted two new techniques into the mathematical DNA of the RCT method, so that a single trial could be used to test a single treatment for a large group of different, but similar-appearing, diseases simultaneously.

These two techniques were like hidden viruses, destroying the normal function of the randomized controlled trial but opening the performance of these trials up to the masses of brilliant academics eager to perform them.”

Hi Lawrence

Congrats on the book!

The key message I took from it: Progress in any scientific field is like building a Jenga tower. If you put rotten blocks at the bottom, you’ll never get it off the ground.

True experts take the time to critically interrogate the foundational work in their field. Researchers who ignore this history risk spending their careers building towers that collapse whenever a second storey is added.

Stagnancy in a field can signal that the problems at hand are wickedly complex. But it can also signal that leaders have ignored a rotten foundation.

Closely related to this is a presentation from @Sander from last year:

There is not much “Science” in “Science”.

@Lawrence_Lynn: have you considered that your arguments could be applied to many areas of medical research? An accounting of actual medical fact vs. pathological consensus is overdue.

“The world is run by people who have faith that they know exactly what is going on.”

“Illusions of skill are supported by a powerful professional culture”

“People can sustain an unshakable level of faith in any proposition, however absurd, when they are sustained by a community of like-minded individuals.”

Near quotes from his talk. Excellent.

To which I would add that the embellishment afforded by statistics derived from pathological consensus provides an impenetrable bubble. That’s why going deep and finding the apical errors in the application of Hill’s method is pivotal.

Could not have said that better. That’s why Wood and others operated as science police. There is no backup. Loss of science police and consequences for getting it wrong produces the present state in critical care.

No science police (self-policing) results in a sustained pathological science which becomes pathological consensus. But who are the science police when everyone is part of a community seeking the favor of each other?

The question is how to get the young to be science police. I was taught at Washington University in St. Louis by a member of the “Antidogmatic Society”. I think the culture has changed to the “How do I get a grant” society. There are no science police in that culture.