Thank you for inviting us to participate in the Data Methods discussion on “individual response”. Judea and I have written the text below. It consists of 3 parts: (1) A more detailed description of the example presented in our blog, (2) An explanation why we believe it brings personalized medicine closer to reality and (3) A discussion of the practical issues raised by @Stephen.

Before we start, let us reiterate the conceptual outline of our theme. We are interested in estimating an individual response to a given treatment, namely, how an individual would react if given treatment and if denied treatment, and we have at our disposal population data, namely, how various subpopulations behave, on average, under treatment and control, including behavior under non-experimental conditions. We are asking: to what degree can population data inform us about an individual response.

The fact that ordinary RCTs, even conducted under ideal conditions, cannot provide sufficient information about an individual response can be seen from the following simple example:

Preliminary Example

Suppose we find no difference between treatment and control groups. For example, assuming our treatment is a drug and our outcome is survival or death, we find that 10% in both treatment and control groups die, while the rest (90%) survive. This makes us conclude that the drug is ineffective, but also leaves us uncertain between (at least) two competing models:

Model-1 – The drug has no effect whatsoever on any individual and

Model-2 – The drug saves 10% of the population and kills another 10%.

From a policy maker viewpoint the two models may be deemed equivalent, the drug has zero average effect on the target population. But from an individual viewpoint the two models differ substantially in the sets of risks and opportunities they offer. Model-1 is useless but safe. Model-2, however, may be deemed dangerous by some and a life-saver by others.

Assume, for the sake of argument, that the drug also provides temporary pain relief. The drug of Model-1 would be deemed desirable and safe by all, whereas the drug of Model-2 will scare away those who do not urgently need the pain relief and aren’t comfortable with the knowledge that there’s a chance (10%) of the drug killing them, while offering a glimpse of hope to those whose suffering has become unbearable, and who would be ready to risk death for the chance (10%) of recovery. (Hoping, of course, they are among the lucky beneficiaries.)

This simple example allows us to illustrate the main theme of our blog post: Supplementing the RCT with an observational study on the same population (conducted, for example, by an independent survey of patients who have the option of taking or avoiding the drug) can help us decide between the two models, thus shedding light on individual behavior, and allowing us to reach conclusions we could not have reached on the basis of the RCT study alone. Indeed, consider an extreme case where the observational study shows 100% survival for all option-having patients, as if each patient knew in advance where danger lies and managed to avoid it. Assume further that a non-zero fraction of patients in the RCT control arm die. Such a finding, though extreme and unlikely, immediately rules out Model-1 which claims no treatment effect on any individual. Such a model could not explain why surveyed people, similar to those who die under control, would survive upon choosing to avoid the drug. Using the RCT study alone, in contrast, we were unable to rule out Model-1, or even to distinguish Model-1 from Model-2.

Now, that we have demonstrated conceptually how certain combinations of observational and experimental data can provide information on individual behavior that each study alone cannot, we are ready to go to the example in our blog post which, based on theoretical bounds derived in (Tian and Pearl, 2001), establishes individual behavior for any combination of observational and experimental data and, moreover, demonstrates critical decision making ramifications of the information obtained.

Part 1

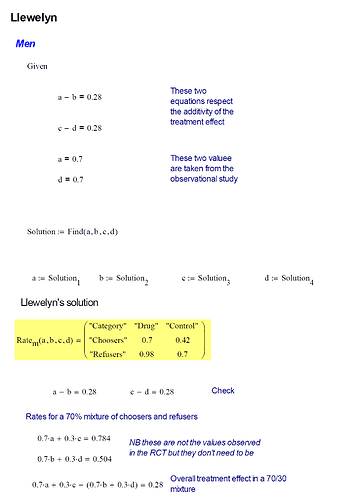

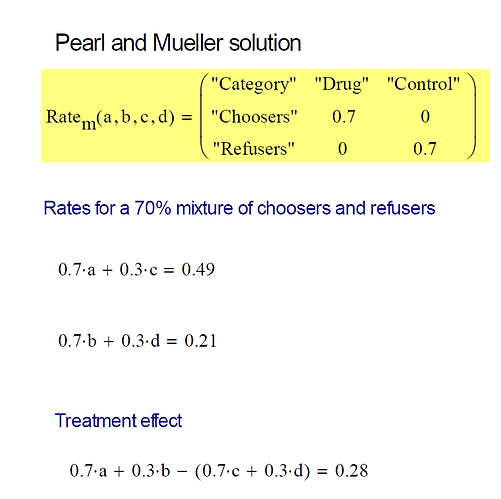

Our example describes three studies, two experimental and one observational. Let’s focus on the second RCT, since the first was used for drug approval only, and its findings are the same as the second. The RCT tells us that there was a 28% improvement, on average, in taking the drug compared to not taking the drug. This was the case among both females and males which we can express as:

CACE(male) = CACE(female) = 0.28,

where CACE stands for Conditional (or Gender-Specific) Average Causal Effect, defined in Eq. (2). The table below provides survival and recovery data under both experimental and observational settings for women and men. Let us denote y

t as recovery among the RCT treatment group and y

c as recovery among the RCT control group. The causal effects for treatment and control groups, P(y

t|Gender) and P(y

c|Gender), were also the same

1, so no differences were noted between males and females.

| Female |

Treatment |

Control |

Choose |

|

|

do(Drug) |

do(No Drug) |

Drug |

No Drug |

| Survivals |

489 (49%) |

210 (21%) |

378 (27%) |

420 (70%) |

| Deaths |

511 (51%) |

790 (79%) |

1,022 (73%) |

180 (30%) |

|

1,000 |

1,000 |

1,400 |

600 |

| Male |

Treatment |

Control |

Choose |

|

|

do(Drug) |

do(No Drug) |

Drug |

No Drug |

| Survivals |

490 (49%) |

210 (21%) |

980 (70%) |

420 (70%) |

| Deaths |

510 (51%) |

790 (79%) |

420 (30%) |

180 (30%) |

|

1,000 |

1,000 |

1,400 |

600 |

We are treating this as an ideal RCT, with 100% compliance and no selection bias or any other biases that can often plague RCTs. We choose to analyze an idealized RCT in order to better conceptualize how an observational study can provide insight for better decision making. Imperfections will be discussed in Section 3 of this post once the conceptual underpinnings are solidified.

In addition to the above RCT, we posited an observational study (survey) conducted on the same population. For the sake of demonstration, we can imagine that the drug was offered at retail stores without a prescription and consumers were freely able to choose whether to take the drug or not. Since the RCT showed a 28% improvement for both men and women, a public recommendation would naturally be issued for everyone suffering from this illness to use this drug for its remedial effects. In the observational study, however, it was found that 70% of men and 70% of women chose to take the drug, while 30% of both men and women avoided it, possibly deterred by side effects or rumors of unexpected deaths. See Table 1.

With this in mind, the observational study could also be considered an RCT with 100% of participants in the trial, 0% of participants receiving a placebo, and 70% compliance among both men and women, as was suggested by @Stephen. However, we prefer not to think of observational studies in that way, since the literature on non-compliance does not provide us with the tools to combine information from two different studies and leverage it to predict individual behavior. Such tools are provided by the theory of Structural Causal Models (Causality 2009) which led to the bounds of (Tian and Pearl, 2001) and in which “non-compliance” is not a variable, but a manifestation of some unobserved factors, such as “side effects” of “rumors of unexpected death”.

An important assumption underlying our analysis is that of “exchangeability”, also known as “consistency” (Pearl, 2010). In other words, we assume that the units selected for the observational study represent the same target population and that their response to treatment is purely biological, unaffected by their respective settings. Using our notation, consistency implies2:

Y_x = Y \text{ whenever } X = x \text{ and } Y_{x'} = Y \text{ whenever } X = x'

In other words, the outcome of a person using a drug is the same regardless of whether that person took the drug by free choice or by virtue of being assigned to the treatment group in a RCT. Similarly, if we observe someone avoiding the drug, their outcome is the same as if they were in the control group of our RCT. In assuming consistency, we are treating this as an ideal observational study so we can conceptualize the vital insights gained for decision making. Deviation from consistency, normally attributed to uncontrolled “placebo effects”, should be dealt with by explicitly representing such factors in the model.

Even though men and women reacted the same according to the RCT, we see in Table 1 that their results are different in the observational study. Among the men and women who avoided the drug, the results were the same, 30% of them died, while 70% of them recovered. However, the results differed among the drug-takers (consisting of 70% of men and 70% of women). Here, 70% of the drug-taking men and only 27% of the drug-taking women recovered. This may seem surprising, since everything else up until this point was the same between men and women. This scenario might seem implausible, but the underlying numbers are realistic and this is a completely possible situation. It is quite plausible that women, unlike men, had a reason (say a side effect) to avoid the drug and chose to take it only when they were at a more advanced stage of the disease.

Remarkably, this observational result of only 27% recovery among drug-choosing women versus 70% recovery among drug-avoiding women, yields crucial decision making information unavailable from the RCT alone. We can now be sure that women, on average, face precisely a 28% chance of benefiting from the drug, and no danger of being harmed by it3. This means that 28% of women will recover with the drug and not recover without the drug, and no woman is in the situation of recovering if and only if she does not take the drug. Naively, we would expect this result given the CACE(female) = 0.28 result from the RCT, if we assume that no woman can be harmed by the drug. This assumption turned out to be correct for women, but not for men. 49% of men will benefit from the drug, which makes men seem to fare better from the drug. But this is balanced by the fact that 21% of men will be harmed by the drug. This means that 21% of men will not recover with the drug and will recover without the drug. This changes the scenario for men as explained in the blog article.

Part 2

The ramifications of these findings on personal decision making are enormous. First, they tell us that the drug is not as safe as the RCT would have us believe, it may cause death in a sizable fraction of patients. Second, they tell us that a woman is totally clear of such dangers, and should have no hesitation to take the drug, unlike a man, who faces a decision; a 21% chance of being harmed by the drug is cause for concern. Physicians, likewise, should be aware of the risks involved before recommending the drug to a man. Third, the data tell policy makers what the overall societal benefit would be if the drug is administered to women only; 28% of the drug-takers would survive who would die otherwise.

.

Fourth, we can go further and examine what we can do with the women and men who chose not to take the drug. Given that they haven’t yet taken the drug, is it worth convincing them to take it? By asking this question we assume, of course, that convincing them implies they did not recover from the illness and are still alive to benefit from being convinced. Accordingly, let us assume that non-recovery does not mean death and changing one’s mind does not affect one’s response. It turns out that if a woman chose not to take the drug and she did not recover, then it is certain that she will recover if she changes her mind and takes the drug. This is a clear action item that will benefit (30% of drug-avoiding women) × (30% of unrecovered drug-avoiding women) = 9% of women. The opposite is the case with men. If a man chose not to take the drug and he did not recover, then it is certain that he will not recover if he then takes the drug. So he should not waste his money or endure any potential side effects by consuming the drug. This probability, which is 1 for women and 0 for men, is known as the Probability of Sufficiency (PS).

Finally, knowing the relative sizes of the benefiting vs harmed subpopulations swings open the door for finding the mechanisms responsible for the differences as well as identifying measurable markers that characterize those subpopulations. For example, women above a certain age may be affected differently by the drug, to be detected by how age affects the bounds on the individual response. Such characteristics can potentially be narrowed repeatedly until the drug’s efficacy can be predicted for an individual with certainty or the underlying mechanisms of the drug can be fully understood.

None of this was possible with only the RCT. Yet, remarkably, an observational study, however sloppy and uncontrolled, provides a deeper perspective on a treatment’s effectiveness. It incorporates individuals’ whims and desires that govern behavior under free-choice settings. And, since such whims and desires are often proxies for factors that also affect outcomes and treatments (i.e., confounders), we gain additional insight hidden by RCTs.

One of the least disputed mantra of causal inference is that we cannot access individual causal effects; we can observe an individual response to treatment or to no-treatment but never to both. However, our theoretical results show that we can get bounds on individual causal effects, which sometimes can be quite narrow and allow us to make accurate personalized decisions. We project therefore that these theoretical results are key for next-generation personalized decision making.

Part 3

This part promised to be a discussion of the practical issues raised by @Stephen. However, I am taking a 4 day vacation and will continue upon my return. This should also give us an opportunity to raise new issues, questions and ideas in light of the (hopefully) clearer exposition of the proposed scheme of achieving more individualized decision making capabilities.

Footnotes

1 P(y

t|female) was rounded up from 48.9% to 49%. The 0.001 difference between P(y

t|female) and P(y

t|male) wasn't necessary, but was constructed to allow for clean point estimates below.

2 Consistency is a property imposed at the individual level, often written as

Y = X \cdot Y(1) + (1-X) \cdot Y(0)

for binary X and Y. Rubin (1974) considered consistency to be an assumption in SUTVA, which defines the potential outcome (PO) framework. Pearl (2006) considered consistency to be a theorem of Structural Equation Models.

3 All derivations are available in the references provided