Huw has asked me to add a few thoughts on the topic of “how to best calibrate outcome probabilities”.

From what I can gather from the previous comments, most of this discussion has focused on improving the calibration of various models for medical outcomes, which remains a very important topic.

However, in my experience most physicians rely on their own “internal thought process” to estimate (or gestalt) outcome probabilities, which are then used for decision making. But physicians are not always good at this sort of estimate. Our clinical experience helps us refine our ability to discriminate between various predictors/risk factors to determine the most likely outcomes, but it does not (on a case-by-case basis) always calibrate those same estimates.

Thus, the “best” way to improve this process would require calibrating physicians' estimates. Ideally, models would provide an external check against which we as physicians can see if our internal estimates match external ones. At the moment, our best bet is to have peer-to-peer discussions, and thus calibrate our judgement to a consensus. This is, however, not ideal.

A large part of physician education focuses on improving our diagnostic skills. No one wants to make a mistake. So we focus on the various ways we might err. A lot of this involves cognitive psychology and various forms of cognitive bias.

But it is equally important that our predicted outcomes have some external measure, so that from time to time physicians can also calibrate their estimates against validated models, and not just the opinion of our peers.
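
As a rough illustration of what such a check might look like, here is a minimal sketch in Python. It assumes we have, for the same set of patients, a physician's gestalt probabilities, a validated model's probabilities, and the observed outcomes. The function names and the numbers are hypothetical and made up purely for illustration; equal-width binning and the Brier score are just one simple way to summarise calibration, not a recommended methodology.

```python
from collections import defaultdict


def calibration_table(estimates, outcomes, n_bins=5):
    """Group probability estimates into equal-width bins and compare the
    mean estimate in each bin with the observed event rate.

    Returns a list of (bin_index, n_cases, mean_estimate, observed_rate).
    """
    bins = defaultdict(list)
    for p, y in zip(estimates, outcomes):
        idx = min(int(p * n_bins), n_bins - 1)  # p == 1.0 falls in the top bin
        bins[idx].append((p, y))
    table = []
    for idx in sorted(bins):
        pairs = bins[idx]
        mean_est = sum(p for p, _ in pairs) / len(pairs)
        obs_rate = sum(y for _, y in pairs) / len(pairs)
        table.append((idx, len(pairs), mean_est, obs_rate))
    return table


def brier_score(estimates, outcomes):
    """Mean squared difference between estimates and 0/1 outcomes
    (lower is better; 0 means perfect)."""
    return sum((p - y) ** 2 for p, y in zip(estimates, outcomes)) / len(estimates)


# Hypothetical data, invented for illustration only.
physician = [0.9, 0.8, 0.7, 0.3, 0.2, 0.6, 0.9, 0.1]  # gestalt estimates
model = [0.7, 0.4, 0.6, 0.2, 0.1, 0.3, 0.8, 0.1]      # validated-model estimates
outcomes = [1, 0, 1, 0, 0, 0, 1, 0]                   # what actually happened

print("Physician Brier score:", brier_score(physician, outcomes))
print("Model Brier score:    ", brier_score(model, outcomes))
for row in calibration_table(physician, outcomes):
    print("bin %d: n=%d, mean estimate=%.2f, observed rate=%.2f" % row)
```

With real data, a physician could look at the bins where their mean estimate sits well above or below the observed rate, and at the cases where their estimate and the model's diverge most, as prompts for reflection rather than as a verdict on either.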

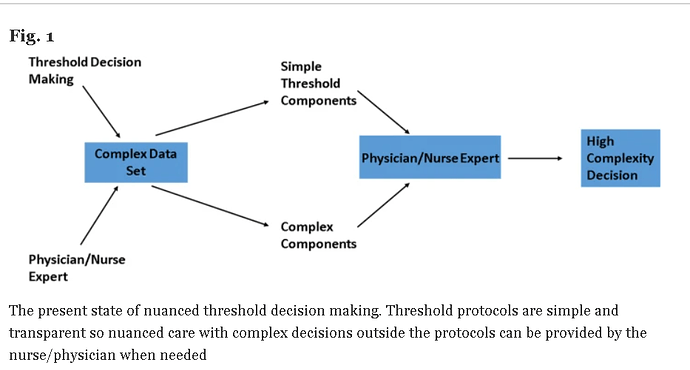

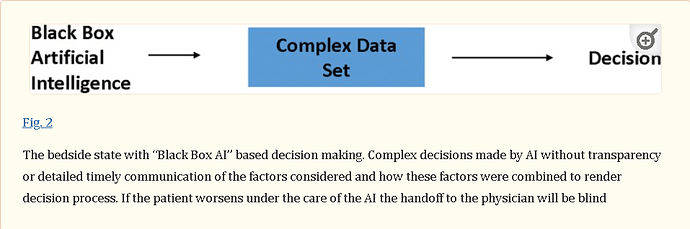

In order for this process to be acceptable, it is important that those external models be transparent, and their assumptions clearly understood. We need to ensure that physicians can have confidence in the models against which we calibrate our internal thought process, and that we understand the limitations of any model should we disagree with it.