In talking to a patient or in making grand policy decisions, when the likely benefit of a treatment is being considered, it is wise to utilize both relative (e.g., hazard ratio) and absolute treatment effects. For the latter, two classes of effects are the life expectancy scale and absolute risk reduction (ARR). Many clinicians are taught to translate ARR to the number needed to treat to save one life or prevent one clinical event — the NNT. There are many reasons that I dislike the NNT. I thought I would take this opportunity to describe some of them. The first problem stems from trying to replace two numbers with one, i.e., instead of reporting the estimated outcome with treatment A and the estimated outcome with treatment B, the physician just reports the difference between the two.

Loses Frame of Reference: Suppose that the quantity of interest is the estimated risk of a stroke by 5y, and the estimated risks under treatments A and B are 0.02 and 0.01. Then ARR=0.01 and NNT=100. But if the two risks are 0.93 and 0.92 the ARR and NNT remain unchanged. Yet the patient will likely perceive the two settings to be quite different for the purpose of their decision making.

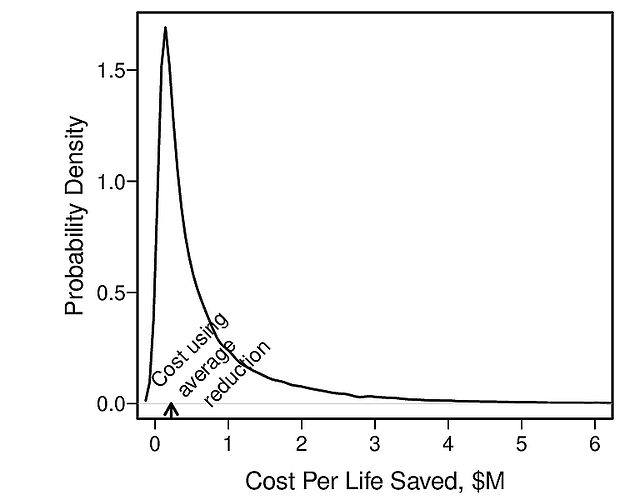

Computing NNT from Group Data and Using it on an Individual: The vast majority of NNTs are computed using group averages. For example, a physician may read a clinical trial report that provides the overall unadjusted cumulative incidence of stroke at 5y to be 0.25 and 0.20 for treatments A, B and compute NNT=1/(0.25 - 0.2) = 20. What is the interpretation of 20? It really has no interpretation. That is because RCTs with even the most restrictive inclusion criteria have a variety of subjects with much differing risks of the outcome. ARR varies strongly with baseline risk as discussed in more detail here. Thus ARR is subject-specific and so is NNT. NNT is impossible to be a constant, and if it is computed as if it were a constant, it may not apply to any real subject.

NNT Invites Physicians to Make Group Decisions: NNT as almost always computed on groups and thus apply to some sort of ‘average person’ who may not exist. An NNT of 50 is interpreted by many physicians as “I need to treat 50 patients to save one”. Who are the 50? Who is the one? Decision making in medicine is one patient at a time, and needs to use that patient’s absolute risk or life expectancy estimates.

NNT Has Great Uncertainty: How often have you seen a confidence interval for NNT? Probably not very often. And when you compute them they are often so wide as to render the NNT point estimate meaningless, even if the problems listed above didn’t bother you. For example, an ARR that comes from the two proportions 1/100 and 2/100 is 0.01 with 0.95 confidence interval of about [0, 0.044]. Confidence limits for NNT are the reciprocals of these two numbers or [23, ∞].

Recommendations: Instead of NNT, report patient-specific estimates of absolute outcome risks under treatments A and B, or two life expectancies.