Those are good points. But regarding the paper, they are discussing analysis of outcomes that are already ordinal.

I agree but the counterargument from their side is that these PRO scores are sums of ordinal scales that can be treated as interval. An underlying assumption here is that the ordinal vs interval debate in this situation is trivial. I think that treating PRO scores as interval causes more trouble than it is worth but those who advocate for linear models would beg to differ.

It would be nice to see some of the distributions, to check for absence of floor effects, ceiling effects, bimodality, heavy tails, etc. On the other hand why take a risk by still using parametric methods when the semiparametric methods are so powerful?

in the jama paper you tweeted (hydroxychloroquine RCT) it says they used simulations for power. Have you used simulations to see how floor effects, bimodality, non-PO etc affect power? And why simulations, ie is it not possible to just use these equations?: Size and power estimation for the Wilcoxon–Mann–Whitney test for ordered categorical data

I think a better place to seek that is one of the papers that studied what goes wrong when you use linear models to analyze ordinal data.

Blockquote

I agree but the counterargument from their side is that these PRO scores are sums of ordinal scales that can be treated as interval.

This is a common assumption in psychometrics, but I’ve never been able to find any rigorous mathematical argument in favor of it.

On the contrary, much like the literature on “significance” testing vs. p values as surprise measures, there is a debate within social science on whether it is permissible to treat ordinal data as interval.

Representational measurement theory would advise analysis that takes into account only the order properties when spacing cannot be verified as equal at all points of the scale.

Joel Mitchell (a mathematical psychologist) as written numerous papers on this issue and considers psychometrics a “pathological science” because of it.

Michell J. Normal Science, Pathological Science and Psychometrics. Theory & Psychology. 2000;10(5):639-667. doi:10.1177/0959354300105004

The consequences of treating these ordinal scales as interval has been explored in this paper:

After reading this, I’ve come to the unfortunate conclusion that parametric analysis of PRO measures at the individual study level makes any synthesis or meta-analysis (without access to individual data) unreliable.

If researchers would simply use ordinal methods at the individual study level, then meta-analysis could be a useful tool. The methods recommended by @f2harrell seem correct if the goal is to learn at both the individual level, and to aggregate studies via meta-analysis.

It sounds as if Medicine’s mantra of “continuous learning” needs to be brought somehow to Statistics.

Latest

The discussion is quite interesting.

they dont know what ‘multivariate’ means. Also, wondering why they would do meta-analysis, i hope that doesnt become some new standard

Individual patient meta-analysis may have a role but I’d rather label it is “efficient analysis of studies with available individual level data, accounting for study heterogeneity”.

These indeed are very interesting outputs. However, I haven’t found any recent RCT reporting such statements. Would you please have any reference?

You see several trials reporting proportions of Y >=y but not as estimated from the model. Yet.

Fingers crossed!

Referring back to this:

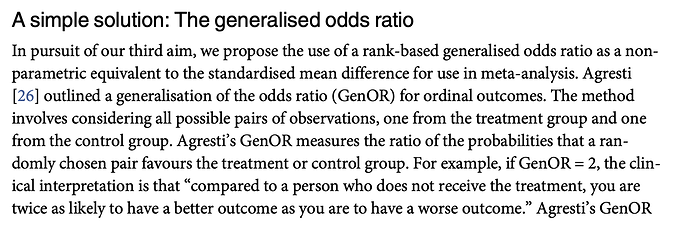

How relatable is it to Agresti’s Generalized Odds Ratio (GOR)?

Your quote sounded similar (not equal) to this explanation about GOR in another paper:

I haven’t seen you talking about GOR. Looking forward to know your opinion about it. Thanks!

The GenOR is closely related to the Mann-Whitney-Wilcoxon statistic, which is a special case of the proportional odds.

I started a different thread that discusses why proportional odds are preferable to metric models for ordinal data, despite decades of debate to the contrary.

Great resource, thanks!

Of note, the authors from the paper I mentioned before cite this another article, where they describe this concept:

“The proposed Wilcoxon-Mann-Whitney generalised odds ratio (WMW GenOR) follows the same logic as Agresti’s GenOR but does not ignore the ties; tied observations are split evenly between better and worse outcomes”

You can easily convert the regular PO model odds ratio to the concordance probability (that counts ties as 1/2) as detailed here. I hope that someone can figure out how that relates to GenOR or Agresti’s.

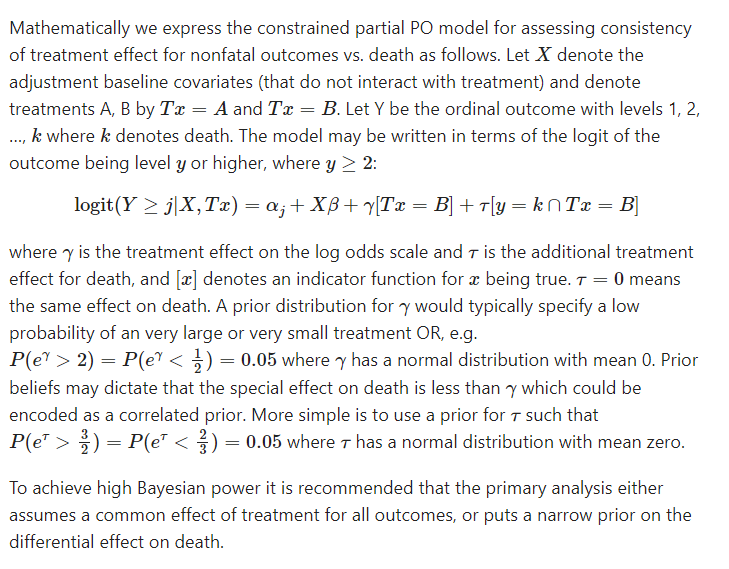

Were you referring to a Constrained Partial Proportional Odds model in this topic?

If so, would that be the posterior probability of \tau being greater/lower than 0 (whichever probability is greater) in this model?

Yes; I just wouldn’t say “whichever probability is greater” but would pre-specify the direct of interest. Or use a non-null assertion as done in the example, e.g., compute the probability that the treatment effects mortality differently by an “interesting” amount, e.g. \Pr(|\tau| > 0.2). Instead of 0.2 choose the log of the OR you care about.

This is such an interesting model. Many possible estimands. I think I finally wrapped my mind around the model.

Thanks again!