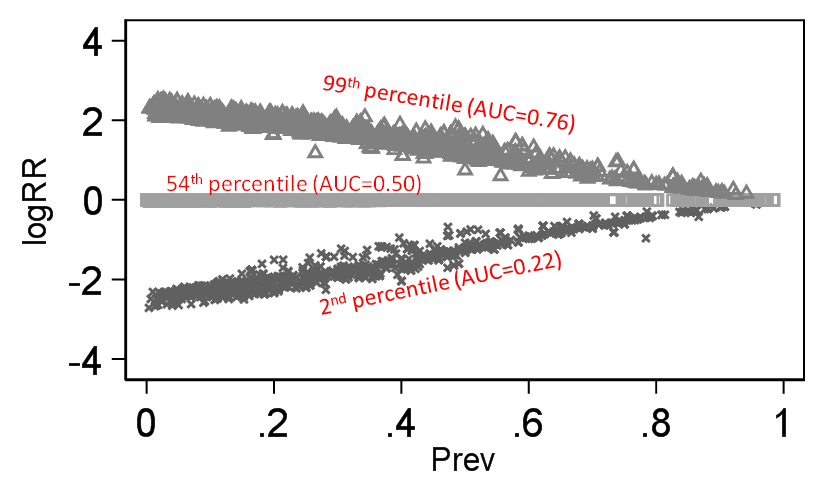

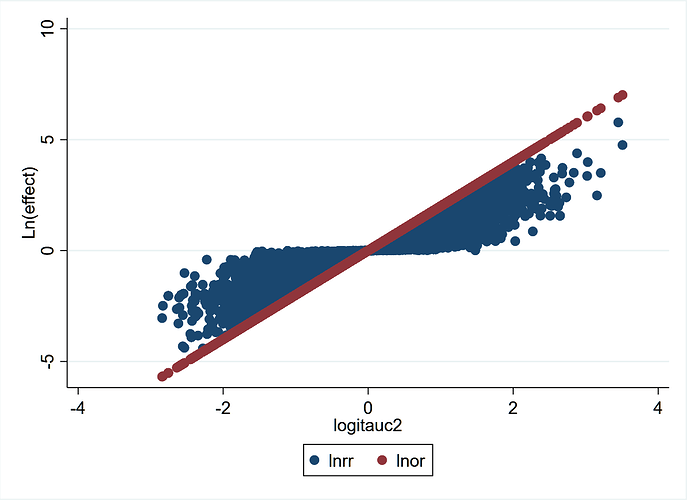

It is true that when decision making in Medicine proceeds (e.g. drug A to prevent outcome Y), clinicians make use of research results that are reported in terms of estimated probabilities. Thus, Pr[Y(1) = 1] is the risk expected under the drug treatment while Pr[Y(0) = 1] is the estimated baseline risk under the control treatment. Thus for the particular baseline risk in the study (Pr[Y(0) = 1]) there is a relative risk (RR) given by RR = Pr[Y(1) = 1] / Pr[Y(0) = 1]. The problem with the common practice advocated to clinicians to combine the patient-specific baseline risk with the RR to estimate a patient’s risk under treatment is that the RR varies with every baseline risk and thus the estimated risk under treatment assuming a constant RR is not really that useful[1]. As stated by Huitfeldt[2], statisticians tend to appreciate this more because RR models may lead to predictions outside the range of valid probabilities or different predictions depending on if the RR or its complement (cRR) are used (e.g. if RR = RR_dead then cRR = RR_alive). However the latter are not the main limitations of the use of the RR in clinical practice, but rather the former is the critical issue and these have led to heated discussions on Frank Harrell’s blog with Huitfeldt.

I interpret this paragraph to state that you consider baseline risk invariance to be the crucial consideration for the choice of effect measure. I want to ask whether you agree or disagree with any of these statements:

- Regardless of which scale is used to measure the effect, it is always possible for the effect of treatment to vary between groups that have different baseline risk. This is true for OR, RR, cRR, RD, etc.

- Therefore, “invariance to baseline risk” is not a mathematical property of any effect measure. Any attempt to prove, using mathematical logic alone, that an effect measure is invariant to baseline risk will always be futile. Given that mathematically guaranteed invariance to baseline risk is impossible, it is not a usable criterion when choosing between effect measures.

- It is true that for some effect measures, invariance to baseline risk is impossible. For example, if the RR among women is 2 and the baseline risk among men is 0.6, then it is not possible for the RR to take the same value in men and women, because that would imply a risk of 2 × 0.6 = 1.2 under treatment among men. The phenomenon where invariance to baseline risk is sometimes impossible corresponds exactly to what we call “non-closure”.

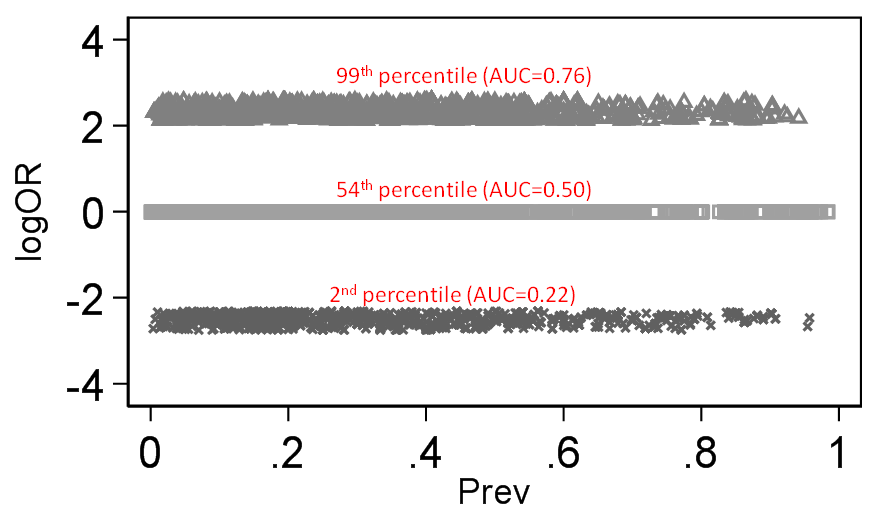

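- The odds ratio and the switch relative risk are both closed: combining any baseline risk with any admissible value of either measure gives a valid probability under treatment.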
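To make the notion of closure concrete, here is a minimal Python sketch (an illustration only, not taken from either party). It applies each effect measure to a grid of baseline risks and checks whether the implied risk under treatment stays within [0, 1]. The function names, the grid, and the parameterization of the switch relative risk are assumptions made for illustration. That parameterization uses the RR for protective effects and the cRR for harmful effects, with the ratio restricted to [0, 1]. A finite grid can exhibit non-closure, but it cannot prove closure.

```python
# Implied risk under treatment, p1, given baseline risk p0 and an effect parameter.
def apply_rr(p0: float, rr: float) -> float:
    return rr * p0

def apply_crr(p0: float, crr: float) -> float:
    # cRR is the ratio of non-event risks: (1 - p1) / (1 - p0)
    return 1 - crr * (1 - p0)

def apply_or(p0: float, odds_ratio: float) -> float:
    # Odds of p0 times the OR, converted back to a risk (written to avoid dividing by 1 - p0)
    return odds_ratio * p0 / (1 - p0 + odds_ratio * p0)

def apply_switch_rr(p0: float, ratio: float, harmful: bool) -> float:
    # Assumed parameterization: protective effects scale the event risk (RR),
    # harmful effects scale the non-event risk (cRR); ratio is taken from [0, 1].
    return 1 - ratio * (1 - p0) if harmful else ratio * p0

BASELINES = [i / 10 for i in range(11)]  # baseline risks 0.0, 0.1, ..., 1.0
RATIOS = [0.25, 0.5, 1.0, 2.0, 4.0]      # illustrative RR, cRR and OR values
SRR_PARAMS = [(r, h) for r in (0.0, 0.25, 0.5, 0.75, 1.0) for h in (False, True)]

def valid_on_grid(apply, params) -> bool:
    """True if every (baseline, parameter) pair on the grid gives a probability in [0, 1]."""
    return all(0.0 <= apply(p0, theta) <= 1.0 for p0 in BASELINES for theta in params)

results = {
    "RR": valid_on_grid(apply_rr, RATIOS),
    "cRR": valid_on_grid(apply_crr, RATIOS),
    "OR": valid_on_grid(apply_or, RATIOS),
    "SRR": valid_on_grid(lambda p0, t: apply_switch_rr(p0, *t), SRR_PARAMS),
}
print(results)             # {'RR': False, 'cRR': False, 'OR': True, 'SRR': True}
print(apply_rr(0.6, 2.0))  # 1.2: the RR-of-2-at-baseline-0.6 example, not a valid probability
```

The RR fails at high baseline risks (e.g. 2 × 0.6). The cRR fails at low baseline risks (e.g. 1 - 4 × 1). The odds ratio and the switch parameterization always stay in range.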

Given that the RR is a ratio of two probabilities and so too is the likelihood ratio (used in diagnostic studies), we have shown that the RR can also be interpreted as a likelihood ratio (LR)[6].

This is uncontested, but I am genuinely puzzled about why you keep bringing up this trivial result, which is not relevant to the discussion.

This interpretation holds when we consider a binary outcome to be a test of the treatment status

Why do you think it is useful to consider a binary outcome as a test of treatment status? Do you think it is clinically useful to determine whether a patient has a disease, for the purpose of inferring probabilistically whether he is likely to have been treated? Or is there some other rationale behind this?
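The uncontested result under discussion can be checked directly. Regard "treated" as the condition being tested for, and the outcome Y = 1 as a positive test. The sensitivity is then Pr[Y(1) = 1] and one minus the specificity is Pr[Y(0) = 1]. So the positive likelihood ratio equals the RR, and the negative likelihood ratio equals the cRR. The Python sketch below checks this with hypothetical risks of 0.3 and 0.2. It also shows the corresponding Bayes update of the odds of having been treated. The function and variable names are illustrative choices, and the allocation probabilities are hypothetical.

```python
import math

def posterior_odds_treated(p1: float, p0: float, prior_treated: float) -> float:
    """Odds of having been treated among patients with Y = 1, by Bayes' theorem.

    p1 -- risk under treatment, Pr[Y(1) = 1]; p0 -- risk under control, Pr[Y(0) = 1];
    prior_treated -- hypothetical probability of allocation to treatment.
    """
    treated_and_case = prior_treated * p1
    control_and_case = (1 - prior_treated) * p0
    return treated_and_case / control_and_case

p1, p0 = 0.3, 0.2             # hypothetical risks
rr = p1 / p0
crr = (1 - p1) / (1 - p0)

sensitivity = p1              # Pr(Y = 1 | treated)
specificity = 1 - p0          # Pr(Y = 0 | untreated)
lr_positive = sensitivity / (1 - specificity)
lr_negative = (1 - sensitivity) / specificity

# Posterior odds of treatment = prior odds x LR+, for any allocation probability
for prior in (0.5, 0.25):
    prior_odds = prior / (1 - prior)
    print(prior, math.isclose(posterior_odds_treated(p1, p0, prior), prior_odds * lr_positive))
```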

The simple solution proposed by Huitfeldt[2] (and attributed to Sheps) of using the survival ratio was also thought intuitive by us[7] at some point before we realized that this does not work because neither the RR nor cRR are independent of baseline risk[1].

If you require that an effect measure can only be useful to the extent that there is a mathematical guarantee of invariance to baseline risk, then no effect measure will meet your requirements, not even your cherished OR. If this is the case, I would sincerely suggest you either go fully non-parametric or alternatively conclude that statistical inference is an impossibility.

There is no point in continuing this conversation if you keep on requiring that the switch relative risk satisfies an impossible requirement, one that is not even satisfied by your preferred effect measure.

This is exactly what Sheps said in her 1959 paper[8] - “Unfortunately the value of the [RR] has no predictable relation to the value of [cRR] …… and depends greatly on the magnitude of [baseline risk]”. Sheps’s approach therefore does nothing to resolve any of the theoretical problems with the RR or its complement.

First of all, if you want to have a good faith discussion about this, please stop with the selective editing immediately. What this part of Sheps’s paper establishes is that there is no one-to-one relationship between RR and cRR, and further, that if you have a constant RR, then cRR is greatly dependent on baseline risk. Similarly, if you have a constant cRR, then RR depends on baseline risk. This is a relational statement about what we can infer about one effect measure if another effect measure is constant.

In fact, for any two non-equivalent effect measures, it will be true that if a (non-null) effect is stable on one scale, then the effect on the other scale will depend on the baseline risk. I can write cRR as a function of RR, and it will have a term for baseline risk. But similarly, I can write OR as a function of RR, and it will also have a term for baseline risk. This relative baseline dependence is symmetric, and gives no reason to prefer one effect measure over the other. This line of reasoning is only interesting if you have some reason to assign priority to one of the scales, based on some kind of background knowledge that the effect is stable on that scale.
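The symmetry described above can be sketched in a few lines of Python (illustrative values only; the helper names are not from either party). Each function converts one effect measure into another at a given baseline risk p0, using p1 = RR × p0 for the RR and p1 = OR × p0 / (1 - p0 + OR × p0) for the OR. With the RR held fixed, the implied cRR and OR drift with p0. With the OR held fixed, the implied RR drifts with p0 too. Only the null value is stable on every scale.

```python
import math

def crr_from_rr(rr: float, p0: float) -> float:
    """cRR implied by a given RR at baseline risk p0 (needs p0 < 1 and rr * p0 <= 1)."""
    return (1 - rr * p0) / (1 - p0)

def or_from_rr(rr: float, p0: float) -> float:
    """OR implied by a given RR at baseline risk p0 (needs rr * p0 < 1)."""
    return rr * (1 - p0) / (1 - rr * p0)

def rr_from_or(odds_ratio: float, p0: float) -> float:
    """RR implied by a given OR at baseline risk p0 (needs p0 > 0)."""
    p1 = odds_ratio * p0 / (1 - p0 + odds_ratio * p0)
    return p1 / p0

for p0 in (0.1, 0.3, 0.5):
    print(
        f"p0={p0}: RR fixed at 0.5 -> cRR={crr_from_rr(0.5, p0):.3f}, OR={or_from_rr(0.5, p0):.3f}; "
        f"OR fixed at 0.5 -> RR={rr_from_or(0.5, p0):.3f}"
    )

# Under the null, RR = OR = 1 at every baseline risk
print(all(math.isclose(or_from_rr(1.0, p0), 1.0) for p0 in (0.1, 0.3, 0.5)))
```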

This follows logically from the fact that these measures, contrary to Huitfeldt, must be different in different groups defined by baseline risk irrespective of any assumptions about biological mechanisms.

Consider a large, high-quality randomized trial which finds that the relative risk (for the effectiveness outcome) is equal between men and women, who have different baseline risks. Are you saying this is theoretically impossible? If I find a study in NEJM or Lancet where there is no such difference, does it disprove your theoretical argument? If not, what does your theoretical argument even mean? Does it rule out any possible future observations?

My impression of what is being done here is that Huitfeldt would like us to ignore the mathematical properties of the ratio and instead believe that some esoteric biological mechanism must be considered to be at play that serves to make the ratio independent from baseline risk. The implication therefore is that non-independence (from baseline risk) must not be faulted on the ratio’s mathematical properties but on the user who does not understand how biology works. This, of course, is all contrary to what Sheps proposed[8].

I am not asking you to ignore any “mathematical properties”. I am telling you that what you are asking for (“mathematically guaranteed baseline risk invariance”) is not a criterion that can be met by any effect measure, and is therefore a red herring. Moreover, I am telling you that the most rational choice is often to start from a simplified biological (rather than mathematical) model that implies stability of the switch relative risk. This follows from the best insights we have from toxicology about how to model mechanism of action, and from psychology and philosophy about how to generalize causal effects. We should then think about all the possible reasons that this biological model could go wrong:

- Is switch prevalence correlated with baseline risk?

- Does switch prevalence differ between segments of the population?

- Does the drug have non-monotonic effects?

Depending on our views about the plausibility of these threats to validity, we can then make informed choices about whether there is any point at all in going forward with the analysis, and if so, whether there is a need for interaction terms or subgroup analysis, whether we can get point identification or have to settle for partial identification/bounds, whether we need a sensitivity analysis, etc.
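The following minimal Python sketch illustrates the kind of simplified switch model discussed above. It is a sketch under its own assumptions, not a reproduction of any published model or code. Within each subgroup, the drug turns each would-be case into a non-case with a fixed "switch" probability. Only when monotonicity fails does it also turn each would-be non-case into a case, with a small probability. If the switch probability is the same in every subgroup and the drug is monotonic, the RR (the relevant switch relative risk for a protective drug) is identical across subgroups with very different baseline risk. Adding a small harmful effect, the third threat listed above, breaks that stability. The subgroup baselines and probabilities are hypothetical.

```python
import math

def risk_under_treatment(p0: float, switch_prob: float, harm_prob: float = 0.0) -> float:
    """Risk under treatment, Pr[Y(1) = 1], in a hypothetical switch model.

    p0          -- baseline risk Pr[Y(0) = 1] in the subgroup
    switch_prob -- probability that the drug turns a would-be case into a non-case
    harm_prob   -- probability that the drug turns a would-be non-case into a case
                   (0 when the drug's effect is monotonic)
    """
    prevented = p0 * switch_prob
    caused = (1 - p0) * harm_prob
    return p0 - prevented + caused

subgroup_baselines = (0.05, 0.2, 0.6)  # hypothetical subgroups with different baseline risk

monotonic_rrs = [risk_under_treatment(p0, 0.3) / p0 for p0 in subgroup_baselines]
non_monotonic_rrs = [risk_under_treatment(p0, 0.3, harm_prob=0.02) / p0 for p0 in subgroup_baselines]

# Same switch probability everywhere and no harm: RR = 1 - 0.3 in every subgroup
print(all(math.isclose(rr, 0.7) for rr in monotonic_rrs))
# A small harmful effect makes the RR depend on baseline risk
print([round(rr, 3) for rr in non_monotonic_rrs])
```

The first two threats can be explored the same way, by letting `switch_prob` vary with `p0` or between subgroups. Either change also makes the RR differ across subgroups.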

I will conclude by saying that the RR and cRR are best interpreted as likelihood ratios and therefore need to be combined for their use as effect measures. The ratio RR/cRR = odds ratio and the latter itself is a likelihood ratio of a different type that connects risk under no-treatment to risk under treatment[6].

This is a complete non sequitur. You have still made absolutely no attempt to explain why “interpretation” matters when the actual math is invariant to interpretation, why the interpretation as a likelihood ratio prohibits its use as an effect measure, or why the odds ratio is required in order to “connect” risk under treatment to risk under no treatment.
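The identity RR/cRR = OR, which both sides accept, is plain algebra: (p1/p0) / ((1 - p1)/(1 - p0)) = [p1/(1 - p1)] / [p0/(1 - p0)]. A short Python check confirms it over an illustrative grid of risks (the names and grid values are illustrative choices). The check uses nothing but the two risks, so it holds in the same way whether the ratios are read as effect measures or as likelihood ratios.

```python
import itertools
import math

def rr(p1: float, p0: float) -> float:
    return p1 / p0

def crr(p1: float, p0: float) -> float:
    return (1 - p1) / (1 - p0)

def odds_ratio(p1: float, p0: float) -> float:
    return (p1 / (1 - p1)) / (p0 / (1 - p0))

grid = (0.05, 0.2, 0.5, 0.8, 0.95)  # illustrative risks strictly between 0 and 1
identity_holds = all(
    math.isclose(rr(p1, p0) / crr(p1, p0), odds_ratio(p1, p0))
    for p1, p0 in itertools.product(grid, repeat=2)
)
print(identity_holds)
```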