Following up on citations to that arXiv paper led me to a valuable series of relatively recent papers that discuss this effect measure issue from a public health and program evaluation perspective. They clarify why collapsible effect measures are important in this application.

In their reply, the authors make the following claim:

- The odds ratio is not a parameter of interest in epidemiology and public health research [5]. Instead, the relative risk and risk difference are two often-used effect measures that are of interest. Both of them are collapsible. Directly modeling these two measures [6,7] eliminates the noncollapsibility matter entirely.

They cite the following paper by Sander Greenland:

Greenland, S. (1987). Interpretation and choice of effect measures in epidemiologic analyses. American Journal of Epidemiology, 125(5), 761-768. (PDF)

I will argue only incidence differences and ratios possess direct interpretations as measures of impact on average risk or hazard … logistic and log-linear models are useful only insofar as they provide improved (smoothed) incidence differences or ratios
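To make the collapsibility claim concrete, here is a small numeric sketch of my own (not taken from any of the cited papers). The stratum risks and the 50/50 stratum weights are made-up numbers, and I assume the stratifying covariate is independent of treatment (as in a randomized trial), so the marginal risks are just weighted averages of the stratum risks.

```python
def odds(p):
    """Convert a risk (probability) to odds."""
    return p / (1 - p)


def measures(p0, p1):
    """Risk difference, risk ratio and odds ratio for control risk p0 and treated risk p1."""
    return {"RD": p1 - p0, "RR": p1 / p0, "OR": odds(p1) / odds(p0)}


def marginal_risks(strata, weights):
    """Average the stratum-specific risks over the covariate distribution.

    Assumes the covariate is independent of treatment, so the same weights
    apply to both arms (hypothetical randomized setting).
    """
    p0 = sum(w * s[0] for s, w in zip(strata, weights))
    p1 = sum(w * s[1] for s, w in zip(strata, weights))
    return p0, p1


# Illustrative numbers only: (control risk, treated risk) in each stratum.
weights = [0.5, 0.5]
same_rd_and_or = [(0.2, 0.5), (0.5, 0.8)]  # RD = 0.3 and OR = 4 in both strata
same_rr = [(0.1, 0.2), (0.3, 0.6)]         # RR = 2 in both strata

for label, strata in [("same RD and OR", same_rd_and_or), ("same RR", same_rr)]:
    print(label)
    for s in strata:
        print("  stratum ", measures(*s))
    print("  marginal", measures(*marginal_risks(strata, weights)))
```

In the first scenario the odds ratio is 4 in both strata but about 3.45 marginally, with no confounding anywhere, while the risk difference stays at 0.3. In the second scenario the risk ratio is 2 in both strata and 2 marginally.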

Would you mind if I started a new thread, similar to the wiki-style Myths thread, that summarizes the areas of agreement in this one, along with references to the relevant literature? I think it is time to put the relevant issues (the higher-level clinical questions, and the statistical modelling and reporting) in a decision-theoretic framework.

After following up on a number of citations, I get the feeling that researchers are still hampered by traditions that were somewhat reasonable given the space and computational constraints of the past, but are not aware that we can do much better with modern computing power.

Relevant Reading:

Greenland, S., & Pearce, N. (2015). Statistical foundations for model-based adjustments. Annual Review of Public Health, 36, 89-108. (PDF)

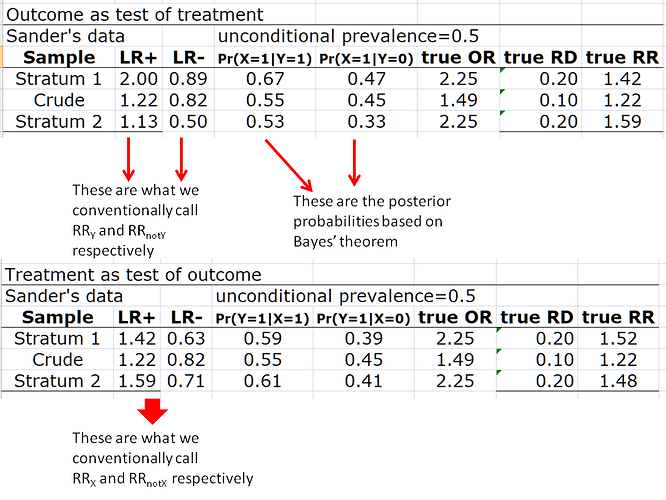

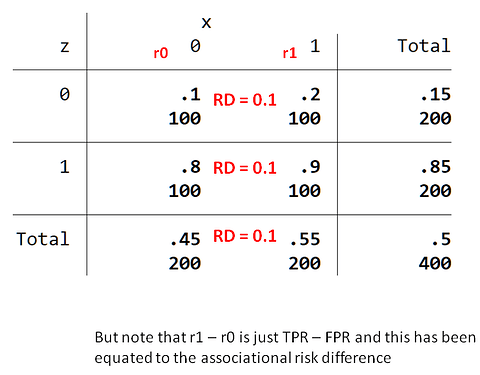

Here is a recently updated (March 2023) summary of the @AndersHuitfeldt paper on the switch risk ratio.

For a statistical justification of the switch (causal) relative risk, the following is worth studying:

Van Der Laan, M. J., Hubbard, A., & Jewell, N. P. (2007). Estimation of treatment effects in randomized trials with non‐compliance and a dichotomous outcome. Journal of the Royal Statistical Society: Series B (Statistical Methodology), 69(3), 463-482. https://rss.onlinelibrary.wiley.com/doi/abs/10.1111/j.1467-9868.2007.00598.x

We will show that the switch causal relative risk can be directly modelled in terms of (e.g.) a logistic model, so that the concerns expressed above for modelling the causal relative risk [needing to correctly specify nuisance parameters] do not apply to this parameter.
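As I understand the general idea behind the "switch" measures, the effect is expressed as a ratio of risks when treatment lowers risk, and as a ratio of survival probabilities (1 minus risk) when treatment raises risk. The sketch below is my own illustration of that idea, not the exact parameterization used in either paper; the function names and the numbers are hypothetical. The point is that the ratio always lies in (0, 1], so carrying it over to any baseline risk gives a valid probability, which is what makes an unconstrained logistic-type model plausible.

```python
def switch_risk_ratio(p0, p1):
    """Return (direction, ratio) for control risk p0 and treated risk p1.

    Assumes 0 < p0 < 1. The ratio is p1/p0 when treatment does not raise risk,
    and (1 - p1)/(1 - p0) when it does, so it always lies between 0 and 1.
    """
    if p1 <= p0:
        return "protective", p1 / p0
    return "harmful", (1 - p1) / (1 - p0)


def apply_switch(p0, direction, ratio):
    """Predict the treated risk at baseline risk p0 from a switch effect."""
    if direction == "protective":
        return ratio * p0
    return 1 - ratio * (1 - p0)


# Illustrative numbers only: effect observed in a low-risk group.
direction, ratio = switch_risk_ratio(0.2, 0.6)   # treatment raises risk
ordinary_rr = 0.6 / 0.2

# Carry the effect over to a hypothetical high-risk group with baseline risk 0.9.
print("switch prediction:  ", apply_switch(0.9, direction, ratio))
print("ordinary RR predicts:", ordinary_rr * 0.9)  # exceeds 1, not a valid risk
```

With these numbers the ordinary risk ratio of 3 would predict a "risk" above 1 at the higher baseline, whereas the switch version stays inside [0, 1].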