The age-old NIH requirement was never quite appropriate, for the reason you mentioned, Paul. This cannot be done reliably without borrowing information, e.g., by putting a prior distribution on the interaction effect that assumes the response to tx for males is more like that for females than not. This Bayesian idea of having interactions “half in” and “half out” of the model is discussed well here.

I owe my OR love to @f2harrell and to the fact that most of my day-to-day work is focused on whether or not HTE exists (as applied to systematic reviews). I will definitely be referring to this and adapting some of these visualizations for my own work.

It’s off topic, i.e., not binary outcomes, but I wonder how one evaluates HTE with a rank-based composite. I’m not sure I’ve seen much literature on that, especially if you want to power on the interaction. In the social science literature they talk about “the adjusted rank transform test”. I’m not familiar with it and wondered if anyone had experience with it. Maybe the probability index is used for the visual display and simulations for power estimation at the design stage… Power estimation for composites seems tenuous anyway.

Use the same methods as in the blog article but applied to a semiparametric ordinal regression model such as the proportional odds model (generalization of the Wilcoxon test).
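A minimal sketch of that suggestion, assuming simulated data and using `MASS::polr` as a stand-in for whatever ordinal fitting function the blog article's code would use. The variable names (`tx`, `age10`, `y`), the cut points, and the effect sizes are all made up for illustration. It fits a proportional odds model with and without a treatment by covariate interaction and compares them with a likelihood ratio test.

```r
library(MASS)  # polr(): proportional odds (ordinal logistic) regression

set.seed(1)
n     <- 600
tx    <- rbinom(n, 1, 0.5)          # hypothetical randomized treatment
age10 <- rnorm(n)                   # hypothetical standardized covariate
# Latent logistic variable cut into 4 ordered categories (simulation assumption)
latent <- 0.4 * age10 - 0.5 * tx + rlogis(n)
y <- cut(latent, breaks = c(-Inf, -1, 0, 1, Inf), ordered_result = TRUE)
d <- data.frame(y, tx, age10)

fit_add <- polr(y ~ tx + age10, data = d)   # constant treatment odds ratio
fit_int <- polr(y ~ tx * age10, data = d)   # treatment effect varies with age10

# Likelihood ratio test for the single interaction term
lr    <- as.numeric(2 * (logLik(fit_int) - logLik(fit_add)))
p_int <- pchisq(lr, df = 1, lower.tail = FALSE)
c(LR = lr, p = p_int)
```

Wrapping the simulation and the test in a function and repeating it many times would give a rough, simulation-based power estimate for the interaction at the design stage.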

I really want to present this blog to my journal club. Do you have publications (clinical and also statistical) that look at HTE for the odds ratio?

There is this mega thread on the issue with lots of references:

I believe that the best over-arching approach is to develop a model that reliably estimates risk for individual patients then to use this model to estimate differences in risks for individuals. The model will typically be stated in terms of log odds ratios because this most often leads to the simplest model that fits, i.e., doesn’t require interactions to rescue lack of fit.
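A small sketch of why a model stated in log odds ratios still yields patient-specific risk differences. The odds ratio of 0.7 and the baseline risks below are hypothetical values chosen only for illustration: the same odds ratio is applied to patients at different baseline risk, and the absolute risk reduction is computed for each.

```r
or <- 0.7                              # hypothetical constant treatment odds ratio
p0 <- c(0.05, 0.20, 0.50, 0.80)        # hypothetical risks under control

# Apply the odds ratio on the logit scale, then transform back to risk
p1  <- plogis(qlogis(p0) + log(or))    # risks under treatment
arr <- p0 - p1                         # absolute risk reduction per patient

data.frame(risk_control = p0, risk_treated = round(p1, 3), arr = round(arr, 3))
```

Even with no interaction on the odds ratio scale, the absolute benefit differs by baseline risk, which is what the distribution plots display.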

These graphs are fantastic.

- Frank, could one say you used the G-formula to produce these plots?
- Is anyone aware of a published RCT with at least one of these plots?

I don’t know. I thought this was simpler than the G-formula. If not, then perhaps I understand the G-formula better than I thought I did.

Sounds like what you did is what the authors described as “Method 1: marginal standardization” in this article.

What I was describing was anti-marginalization.

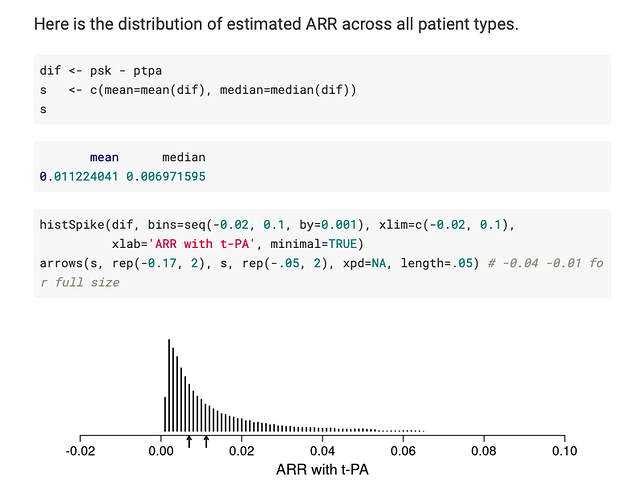

Good point! G-computation requires one to calculate the mean, as you depicted with an arrow in this plot:

Is there a technical term for showing the whole distribution?

P.S: Here is an R code example of proper g-computation (adapted from here).

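```r
# Fit the outcome model (y: binary outcome, t: treatment indicator)
fit <- glm(y ~ t, data = data, family = binomial())

# Estimate potential outcomes under treatment
# (transform() works on a copy, so the observed t in data is not overwritten)
pred1 <- predict(fit, newdata = transform(data, t = 1), type = "response")

# Estimate potential outcomes under control
pred0 <- predict(fit, newdata = transform(data, t = 0), type = "response")

# Compute the marginal risk difference
mean(pred1) - mean(pred0)
```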

I don’t know a concise technical term for showing the whole distribution. To avoid being serious for a moment, terms like “honesty” and “full disclosure” come to mind, but we need some shorter version of “maintaining full conditioning to recognize that at least one effect measure must be covariate-dependent when the risk factor is not ignorable.”

Dr. Harrell,

The developer of the R package {marginaleffects} is interested in posting a case study about one of your posts on the distribution of risk differences. Would you allow it? With proper reference to your work, of course.

More details about this inquiry here:

No problem with that.

@f2harrell I enjoyed reading this related paper by you and colleagues: A tutorial on individualized treatment effect prediction from randomized trials with a binary endpoint. The attached R code is very insightful.

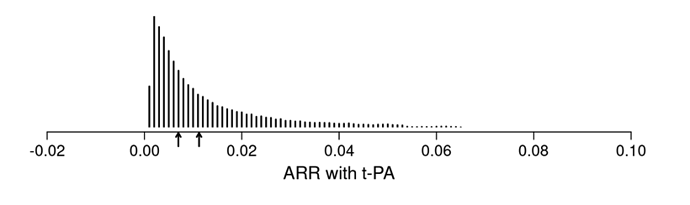

I want to double check with you that the first plot below (from your article’s Figure 4) corresponds to the estimated ARR distribution in your blog post (second plot below).

Please note I am not referring to the underlying logistic regression model. Instead, I refer to computing the predicted probability of an outcome for each observed row of the data in two counterfactual cases: when treatment is “tx==0” and when treatment is “tx==1”. Then, computing the differences between these two sets of predictions.
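The computation described above can be sketched as follows. This is an illustration on simulated data, not the article's or the blog post's code; the covariate `age`, the sample size, and the coefficients are assumptions made up for the example.

```r
set.seed(2)
n <- 500
d <- data.frame(tx = rbinom(n, 1, 0.5), age = rnorm(n, 60, 10))
# Simulated binary outcome (all coefficients are hypothetical)
d$y <- rbinom(n, 1, plogis(-1 + 0.05 * (d$age - 60) - 0.6 * d$tx))

fit <- glm(y ~ tx + age, data = d, family = binomial())

# Predicted risk for every observed row under each counterfactual treatment
p0 <- predict(fit, newdata = transform(d, tx = 0), type = "response")
p1 <- predict(fit, newdata = transform(d, tx = 1), type = "response")

arr <- p0 - p1      # one estimated absolute risk reduction per patient
quantile(arr)       # summarizes the whole distribution of ARRs
mean(arr)           # averaging collapses it to a single marginal risk difference
```

Plotting `arr` (for example with `hist(arr)`) shows the whole distribution, while `mean(arr)` is the single number that marginal standardization reports.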

first plot (from article)

second plot (from blog post)

I think that is correct.

This is an older discussion on the blog article page, from the previous web platform for the blog, from 2019.

Ewout Steyerberg: Great illustration of detailed modeling in a large-scale trial!

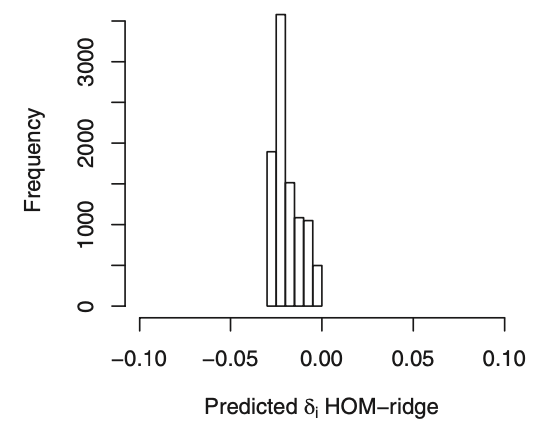

One question on the choice of penalty. The AIC seems to be optimal with penalty going to infinity, so no interactions? What is the basis for the choice of penalty = 30000:

```r
h <- update(g, penalty=list(simple=0, interaction=30000), ...)
```

Is this merely for illustration of what penalized interactions would do?

Frank Harrell: Thanks Ewout. Yes, by AIC the optimum penalty is infinite. I overrode this to lambda=30000 to give the benefit of the doubt to differential treatment effect. It would be interesting to see if a Bayesian analysis would result in the same narrow distribution for ORs.
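To illustrate what penalizing only the interaction does, here is a base R sketch of penalized maximum likelihood with a quadratic penalty on the interaction coefficient alone. It is not the rms implementation: the penalty scaling there differs, so the value `lambda = 50` below is not comparable to 30000, and the data and coefficients are simulated assumptions.

```r
set.seed(3)
n  <- 1000
tx <- rbinom(n, 1, 0.5)
x  <- rnorm(n)
y  <- rbinom(n, 1, plogis(-1 + 0.8 * x - 0.5 * tx + 0.3 * tx * x))
X  <- cbind(1, x, tx, tx * x)      # columns: intercept, x, tx, tx:x

# Negative log-likelihood plus a ridge penalty on the interaction term only
pen_negloglik <- function(beta, lambda) {
  eta <- drop(X %*% beta)
  -sum(y * eta - log1p(exp(eta))) + 0.5 * lambda * beta[4]^2
}

fit_pen <- function(lambda) {
  optim(rep(0, 4), pen_negloglik, lambda = lambda, method = "BFGS")$par
}

b_mle <- fit_pen(0)     # unpenalized: interaction fully "in"
b_pen <- fit_pen(50)    # penalized: interaction shrunk toward zero
rbind(unpenalized = b_mle, penalized = b_pen)
```

As `lambda` grows, the interaction coefficient is pulled toward zero and the fit approaches the no-interaction model, which is the sense in which an infinite penalty means "no interactions".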

@f2harrell have you explored these distributions of ARRs in the Bayesian context? In this case, one would have a posterior distribution for each ARR, which is quite difficult to visualize/summarize.

I know @BenYAndrew has nicely developed a shiny app to show each patient-specific ARR separately. Bayesian Modeling of RCTs to Estimate Patient-Specific Efficacy