I have been periodically discussing the state of RCT in critical care. While there have been but few on-line comments, I have been encouraged by the off-line comments and approvals of that which I am trying to teach here. This work comes from 40 years in the ICU watching the evolution of the science and I speak primarily to the young who may still be developing an overarching cognitive construct of critical care science.

This is the evolution I observed over 40yrs:

-

The emergence of “threshold decision making” in the 1980s, The threshold approach to clinical decision making - PubMed

-

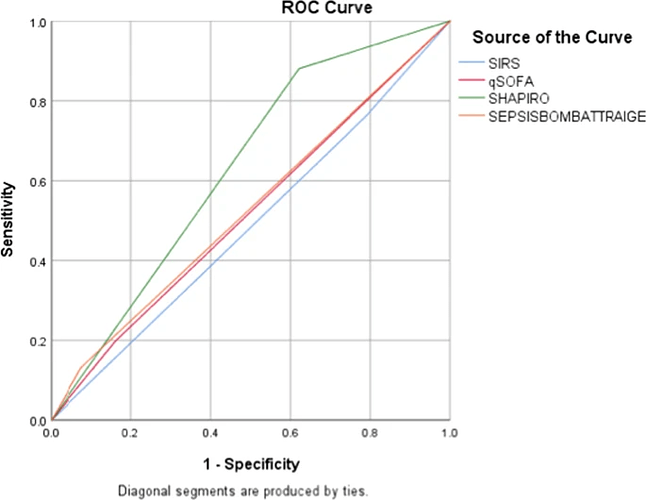

The emergence of “Consensus Syndromes” defined by guessed threshold sets like SIRS and SOFA (e.g Sepsis , ARDS, Sleep Apnea) in the 1980-90s,

-

Non reproducibility of RCT applied to most of the above “syndromes”

-

The progressive protocolization of the medical care of the consensus syndromes since the turn of the century (often despite non-reproducibility of RCT).

Without this perspective it would be hard to see how all of this happened. After all, critical care syndromes seem so scientific on the surface. However, while there was much evidence that the science, mathematically embellished by statistics, was poorly productive, it was not till the Pandemic that the dangers of the weak science was exposed. Here is a paper discussing the evolution of thought back from the idea that severe COVID Pneumonia was the “syndrome” Acute Respiratory Distress Syndrome (ARDS). You see the authors struggle with how to present the discovery that treatment effective for ARDS is not effective for sever COVID pneumonia which meets the consensus criteria for ARDS.

Reading the discussion is like rewinding 30 years of the evolution of the science which pinned together 1, 2 , 3 & 4 above. Its strange that scientists think their consensus automatically applies to some future disease. Yet virtually all did. Scientists who were taught these syndromes were real cannot process the concept that a disease which meets the criteria for the syndrome is not in the syndrome. It produces cognitive dissidence. Not even the Lexicon works anymore. Is it ARDS or not? A silly question really, but one that makes sense to them in their made up world of consensus syndromes.

Every mistake in medical science has a companion mathematical mistake. The language of medical science is math. The construct of the human time matrix is comprised of time series of mathematical signals.

Here you see an alternative argument against critical care syndromes derived from the same problem but this is a more technical problem of the critical care syndrome construct.

This argument was made before the COVID pandemic and, while still valid, the argument is transcended by the evidence derived from the pandemic that the 1990s consensus syndromes themselves and their mathematical embellishments are the problem.

For critical care science we stand at a pivotal point. Yet, are the older thought leaders and their acolytes prepared to openly introspect? Imagine standing there and suddenly realizing at age 62 yrs that the geocentric model you have taught your students and studied for 30 yrs is not valid. That’s why this is an opportunity for the young to escape the paradigm generated by their thought leaders when those thought leaders were young. Its your turn now. Time to make your own path.

This is the end of ARDS. Everyone knows severe COVID pneumonia cannot be combined in for an RCT with all the other traditional ARDS cases. its not a cognitive leap to realize that ARDS as defined by the criteria is not a valid pathophysiologic entity which will stand the test of time as it exists today.

If the pandemic precipitates new thinking and open reconsideration of the fundamental dogma of critical care, we can expect a revolution in critical care science. If not we can anticipate another decade of thought leaders who secretly do not really believe the syndrome dogma they teach.

If you are a young scientist or mathematician observing all of this, this is your opportunity. In the translated words of Louis Pasture “In the fields of observation, chance favors only the prepared mind.”

Today, after this pandemic, in critical care science, fortune favors the bold youth.